3. Coding and Musical Gesture

In this chapter we examine an often overlooked possibility of mobile touchscreen computing, that of text-based music software programming. To this end, we have designed and implemented an iPad application for real-time coding and performance in the ChucK music programming language [3]. This application shares much of its design philosophy, source code, and visual style with the miniAudicle editor for desktop ChucK development [1], and thus we call it miniAudicle for iPad.

Motivation and Principles

The motivation behind miniAudicle for iPad is to provide a satisfactory method for creation and editing of ChucK code and to fully leverage the interaction possibilities of mobile touchscreen devices. The overriding design philosophy was not to transplant a desktop software development environment to a tablet, but to consider what interactions the tablet might best provide for us.

Firstly, we note that it is unreasonable to completely discard the text-input metaphor, that of typing code into an editor, because the fundamental unit of ChucK programming is text. For this reason we have sought to create the best code editing interface we could for a touchscreen device. Typing extensive text documents on touchscreens is widely considered undesirable. However, using a variety of popular techniques like syntax highlighting, auto-completion, and extended keyboards, we can optimize this experience. With these techniques, the number of keystrokes required to enter code is significantly reduced, as is the number of input errors produced in doing so. Additional interaction techniques can improve the text editing experience beyond what is available on the desktop. For example, one might tap a unit generator type name in the code window to bring up a list of alternative unit generators of the same category (e.g. oscillators, filters, reverbs). Tapping a numeric literal could bring up a slider to set the value, where a one-finger swipe adjusts the value and a two-finger pinch changes the granularity of those adjustments.

Secondly, we believe that live-coding performance is a fundamental aspect of computer music programming, and contend that the mobile touchscreen paradigm is uniquely equipped to support this style of computing. Live-coding often involves the control and processing of many scraps of code, with multiple programs interacting in multiple levels of intricacy. Direct manipulation, the quintessential feature of a multitouch screen, might allow large groups of “units” — individual ChucK scripts — to be efficiently and rapidly controlled in real-time. This is the basis of miniAudicle for iPad’s Player mode, in which a user assembles and interacts with any number of ChucK programs simultaneously.

Lastly, we are interested in the physicality of the tablet form-factor itself. The iPad’s hardware design presents a number of interesting possibilities for musical programming. For instance, it is relatively easy to generate audio feedback by directing sound with one’s hand from the iPad’s speaker to its microphone. A ChucK program could easily tune this feedback to musical ends, while the user maintains manual control over the presence and character of the feedback. The iPad contains a number of environmental sensors, including an accelerometer, gyroscope, and compass. ChucK programs that incorporate these inputs might use them to create a highly gestural musical interaction, using the tablet as both an audio processor and as a physical controller.
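As a rough illustration of tuning feedback in code, the following sketch routes the microphone through a resonant band-pass filter to the speaker, so that any acoustic feedback tends to ring near the filter's center frequency. The pitch set, Q, gain, and timing are arbitrary values chosen for illustration, and the sketch assumes audio input has been enabled.

```chuck
// Microphone -> resonant band-pass filter -> speaker.
// All numeric values are illustrative assumptions, not tuned settings.
adc => BPF bp => Gain g => dac;
8 => bp.Q;       // narrow band, so feedback rings near bp.freq
0.5 => g.gain;   // keep the gain modest to limit runaway feedback

// pitches (MIDI note numbers) that the feedback is steered toward
[57, 60, 64, 67] @=> int notes[];

while(true)
{
    // pick a pitch at random and hold it for a moment
    notes[Math.random2(0, notes.size()-1)] => Std.mtof => bp.freq;
    2::second => now;
}
```

The performer's hand position still determines whether feedback occurs at all; the program only shapes which frequencies are favored.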

Design

Our approach to these goals is to provide two complementary modes: Editor mode and Player mode. Editor mode aims to provide the best code editor possible given the limitations of typing text on a touchscreen. Player mode allows users to play and modify scripts concurrently using ChucK’s intrinsic on-the-fly programming capabilities. It aims to enable multitouch live-coding and performance techniques that would be difficult or impossible on traditional desktop computers. We believe the combination of these systems makes miniAudicle for iPad a compelling mobile system for music programming and live coding.

Interaction in miniAudicle for iPad is divided between these primary modes, described individually below. Several interface elements are common to both modes. The first of these is a script browser, which allows creating, managing, and selecting individual ChucK programs to load into either mode. Views of ChucK’s console output (such as error messages and internal diagnostics) and a list of the ChucK shreds (processes) running in the system are available from the main application toolbar. A settings menu can also be found here; it allows for turning on audio input, adjusting the audio buffer size, enabling adaptive buffering, and turning on background audio, which causes ChucK programs to continue running in the background when other programs are run on the iPad. This toolbar also contains a switch to toggle between Editor and Player modes.

Editor

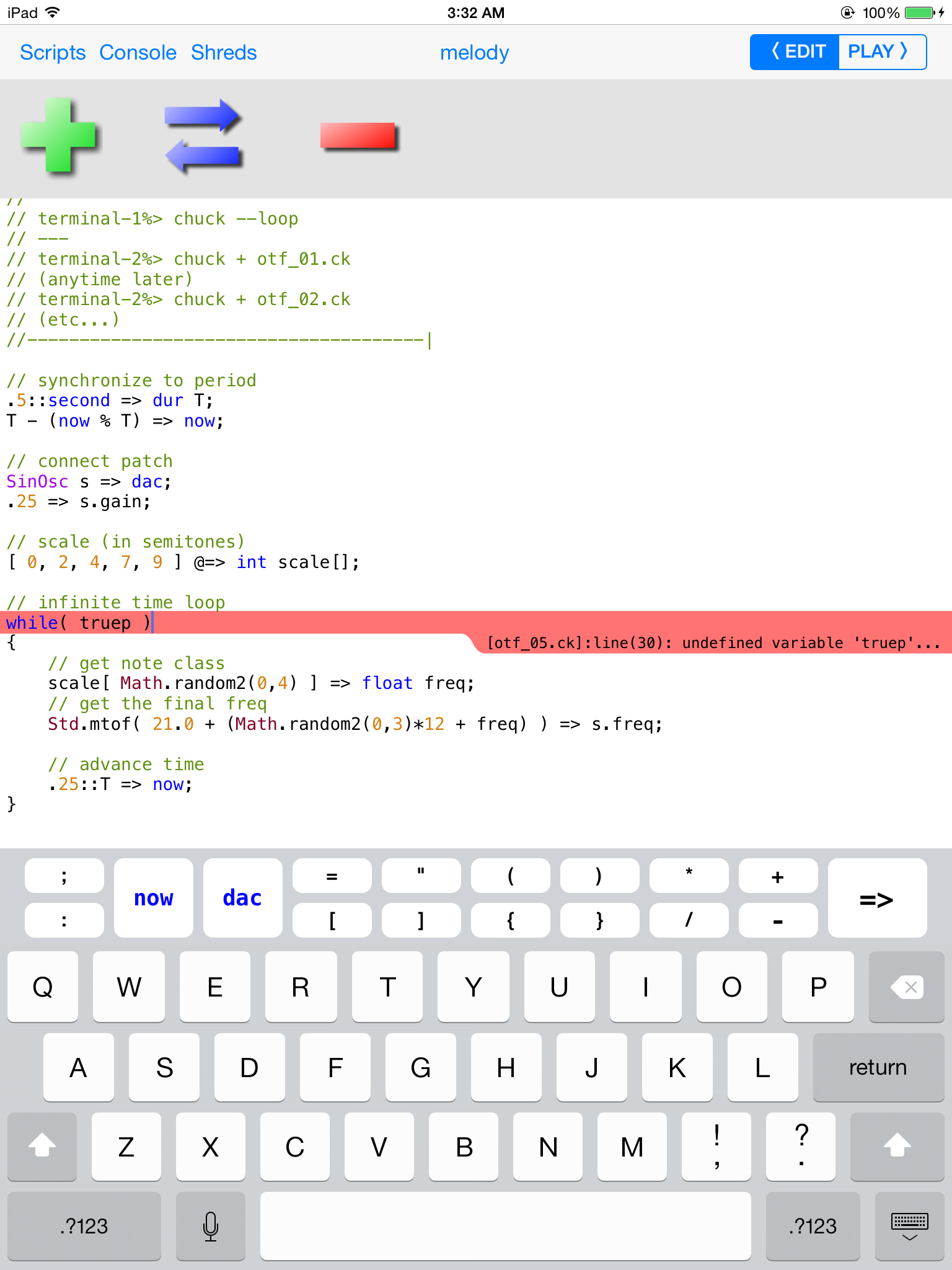

Editor mode is the primary interface for writing and testing ChucK code. This mode is centered around a touch-based text editing view, in which a single ChucK source document is presented at a time (Figure 1.1). The document to edit can be changed via the script browser. Once a document is loaded, the text view provides a number of features common to programming text editors, such as syntax-based text coloring and integrated error reporting. Additionally, the on-screen keyboard has been supplemented with ChucK-specific keys for characters and combinations thereof that appear frequently in ChucK programs. These additional keys include the chuck operator (=>) and its variants, mathematical operators, a variety of brace characters, additional syntax characters, and the now/dac keywords. In cases where a specific key has variants, pressing and holding the key will reveal a menu for selecting the desired variant; for instance, the => key can be pressed and held to access keys for the <=, <<, =<, and @=> operators. This mode also features buttons for adding, replacing, and removing the currently edited ChucK script, enabling a small degree of on-the-fly programming and performance capabilities.

Player

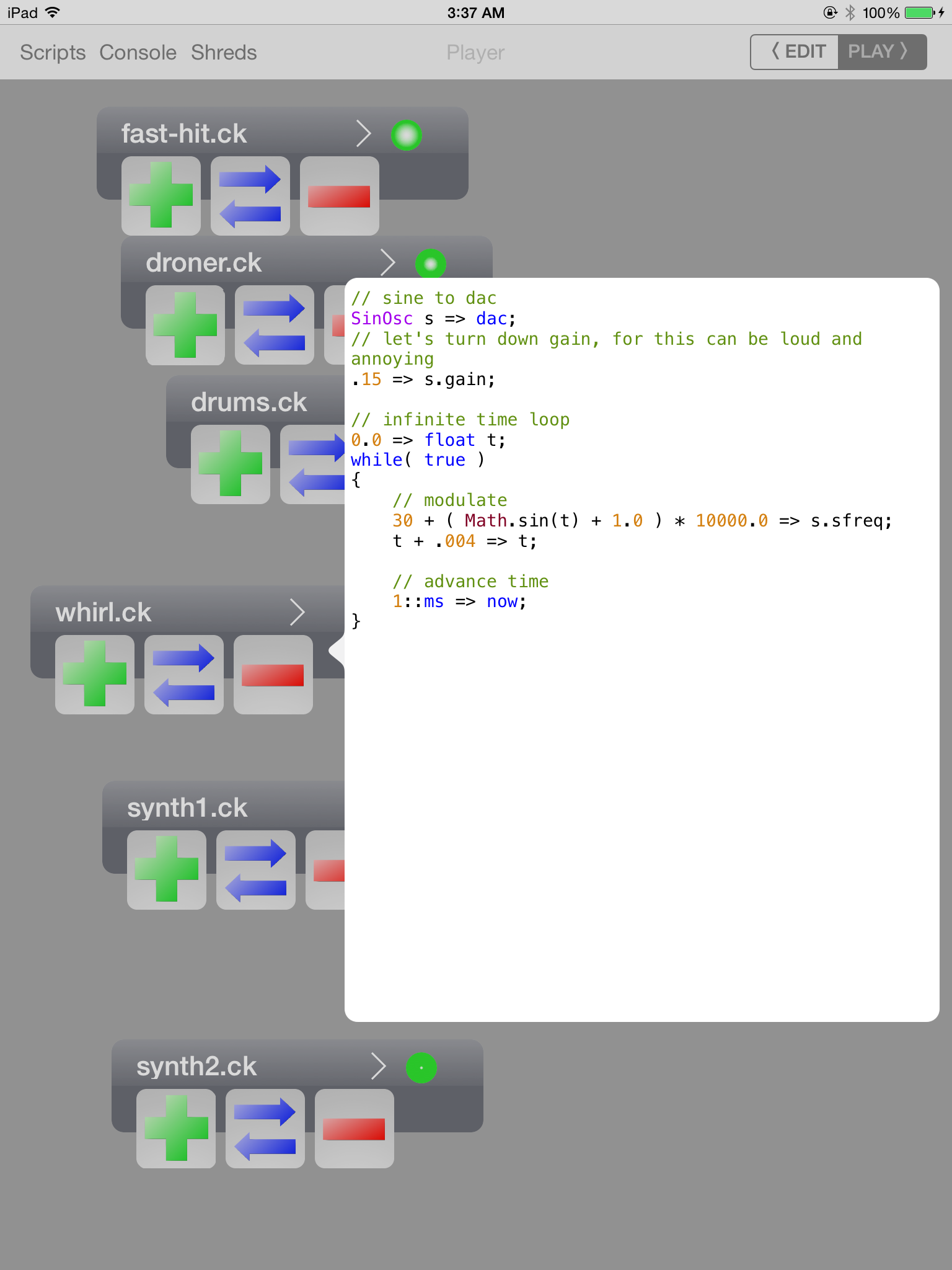

Player mode is designed for live performance, on-the-fly programming, and real-time musical experimentation. In this mode, selected ChucK scripts are displayed as small tabs in a large open area (Figure 1.2). The script tabs can be rearranged in the space by moving them with touch, with new tabs created via the script browser. Each tab has prominent buttons to add the script to the virtual machine, replace it, and remove it, enabling basic on-the-fly programming of each script in the player (Figure 1.3). A script can be added multiple times, and currently running scripts are visualized by one or more glowing dots on that script’s tab. Pressing a dot removes the instance of the script that the dot represents. An arrow button on the tab pops open a mini-editor for that script, from which the full Editor mode can also be opened if desired. Pressing and holding any tab outside of the button areas will cause delete buttons to appear above all tabs, a common interaction for deleting items from collections in touchscreen apps.
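For readers who think in code, the add, replace, and remove actions have close analogues in ChucK's standard Machine class. The sketch below is only an illustration of those three operations from within a ChucK program; whether Player mode itself goes through this interface is not stated here, and the script name drone.ck is hypothetical.

```chuck
// Hypothetical script name; substitute any ChucK file available on disk.
"drone.ck" => string path;

// "add": compile the script and start it as a new shred.
// Adding twice yields two running copies, like two dots on a tab.
Machine.add(path) => int a;
Machine.add(path) => int b;

2::second => now;

// "replace": swap the first copy for a freshly compiled version
Machine.replace(a, path) => int a2;

2::second => now;

// "remove": stop each running copy individually
Machine.remove(a2);
Machine.remove(b);
```

Each call returns a shred ID, which plays the role of the glowing dot: it is the handle through which one particular running copy is later replaced or removed.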

The design of Player mode is theoretically not limited to the touchscreen environment; one can easily imagine a similar design for ChucK or miniAudicle on desktop computers, using mouse-and-keyboard interaction. However, touchscreens allow a level of direct, simultaneous interaction with Player mode that is not feasible using a mouse and keyboard. Using touch control, multiple scripts can be fired off or removed instantly, different combinations of shreds can be quickly configured and evaluated, and multiple edited scripts can be replaced on-the-fly in tandem. These sorts of interactions are not impossible in mouse-and-keyboard environments, but they are typically held back by the sluggishness of mouse navigation or require the use of arcane key command sequences. Therefore, miniAudicle for iPad’s Player mode might offer distinct improvements over certain desktop-based systems for interactive music programming.

ChucK Mobile API

An additional goal of miniAudicle for iPad is to allow ChucK programmers to map the various sensors of the iPad to sound. Presently, the standard iPad hardware has accelerometer, gyroscope, and magnetic (compass) sensors. Geographic location is also provided via Wi-Fi positioning and, in some models, the Global Positioning System (GPS). The ChucK Mobile Application Programming Interface (API) makes this spatial data available to ChucK programs running on miniAudicle for iPad.

The ChucK Mobile API consists of a single ChucK class called Motion, an instance of which is configured to provide the desired sensor and/or location data. An example is provided in the listing below. The overall API resembles how the MidiIn, Hid, and OscIn classes are used in ChucK; the use of Motion should be familiar to ChucK programmers who have worked with those preexisting classes. First, a simple audio patch is created, and then an instance of Motion and an instance of MotionMsg are declared. The open function is called with an argument of Motion.ACCEL to indicate that accelerometer data is desired and should be sent to the program. Other options here are Motion.GYRO (gyroscope data), Motion.MAG (magnetometer), Motion.ATTITUDE (computed attitude based on sensor fusion), Motion.HEADING (compass heading), and Motion.LOCATION (geographic latitude and longitude). Any combination of these can be opened simultaneously in a given Motion instance, allowing a single ChucK program to work with multiple sensor input streams concurrently. After opening the device, the Motion instance is chucked to now, which suspends the shred until new data has been received. This data is then read into the MotionMsg and the message type is checked against the desired type (e.g. Motion.ACCEL), as multiple message types will be received if multiple input sources have been opened. Lastly, the x and y acceleration values are mapped to parameters of the audio patch.

```chuck
// audio patch: sawtooth oscillator through a resonant low-pass filter
SawOsc s => LPF filter => dac;
7 => filter.Q;

Motion mo;
MotionMsg momsg;

// request accelerometer data; open() returns false on failure
if(!mo.open(Motion.ACCEL))
{
    cherr <= "unable to open accelerometer" <= IO.nl();
    me.exit();
}

while(true)
{
    // suspend this shred until new sensor data arrives
    mo => now;

    // drain all pending messages
    while(mo.recv(momsg))
    {
        if(momsg.type == Motion.ACCEL)
        {
            // x acceleration sets the oscillator frequency
            Std.scalef(momsg.x, -1, 1, 200, 1000) => s.freq;
            // y acceleration sets the filter cutoff relative to that frequency
            Std.scalef(momsg.y, -1, 1, s.freq(), s.freq()*10)
                => filter.freq;
        }
    }
}
```

This interface has been designed with portability in mind, so it need not be tied to sensors specific to iPad. open() returns false upon failure, which allows programs to determine if the desired sensor is available on the device they are running on. The motion and location sensors made available by the ChucK Mobile API are often present across a number of other consumer tablet and phone devices in addition to the iPad. If miniAudicle for iPad were to be developed for a different tablet OS or for mobile phones, ChucK programs written using the ChucK Mobile API would conceivably run and use any available sensors without modification.
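The two points above, opening several sensors on one Motion instance and using the return value of open() to adapt to the hardware, can be combined in a short sketch. It reuses only the calls shown in the listing above, with two assumptions that the text does not confirm: that open() returns an int-compatible truth value, and that gyroscope messages carry their reading in the same x field as accelerometer messages. The gain mapping is likewise an arbitrary choice.

```chuck
// Sketch only. Assumptions: open() yields an int (0 on failure), and
// MotionMsg.x is populated for gyroscope messages as well as accelerometer ones.
SinOsc s => Gain g => dac;
0.25 => g.gain;

Motion mo;
MotionMsg momsg;

// try each sensor and remember which ones are actually available
mo.open(Motion.ACCEL) => int haveAccel;
mo.open(Motion.GYRO) => int haveGyro;

if(!haveAccel && !haveGyro)
{
    cherr <= "no motion sensors available" <= IO.nl();
    me.exit();
}

while(true)
{
    mo => now;

    while(mo.recv(momsg))
    {
        // messages from both sensors arrive on the same instance,
        // so dispatch on the message type
        if(momsg.type == Motion.ACCEL)
        {
            // tilt sets pitch
            Std.scalef(momsg.x, -1, 1, 200, 1000) => s.freq;
        }
        else if(momsg.type == Motion.GYRO)
        {
            // rotation sets loudness; the input range is a guess, so clamp it
            Math.min(1.0, Math.fabs(momsg.x)) * 0.5 => g.gain;
        }
    }
}
```

On a device with only one of the two sensors, the program still runs and simply never receives messages of the missing type.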

Using the ChucK Mobile API, ChucK programmers can readily experiment with motion-based interfaces to sound synthesis. The ability to quickly mock up and evaluate these interactions in place is a distinguishing characteristic of developing code on the device itself.

OSC and MIDI

The version of ChucK embedded into miniAudicle for iPad includes ChucK’s standard support for Open Sound Control and MIDI input and output. Therefore, programs running in miniAudicle for iPad can be used as the source of musical control data sent over either protocol, or as the recipient of data generated by other programs. For instance, one might use TouchOSC as a controller for a ChucK-based synthesis patch running in miniAudicle for iPad by directing TouchOSC’s output to localhost, and ensuring the correct port is used in both programs. Another example is a generative composition, programmed in ChucK, that sends note data to the virtual MIDI port of a synthesizer running on the iPad. To support these usages, the “Background Audio” option needs to be enabled in miniAudicle for iPad’s settings menu.
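The TouchOSC scenario might look like the following sketch, which uses ChucK's standard OscIn and OscMsg classes. The port number 8000 and the address /1/fader1 are assumptions for illustration; both must match whatever the controller layout actually sends, and the fader is assumed to emit a single float between 0 and 1.

```chuck
// Sketch only: port and OSC address are assumed values.
SinOsc s => dac;
0.3 => s.gain;

OscIn oin;
OscMsg msg;

8000 => oin.port;                // must match the controller's outgoing port
oin.addAddress("/1/fader1, f");  // listen for one float at this address

while(true)
{
    // suspend this shred until an OSC message arrives
    oin => now;

    while(oin.recv(msg))
    {
        // map the fader position (assumed 0..1) to frequency
        Std.scalef(msg.getFloat(0), 0, 1, 200, 1000) => s.freq;
    }
}
```

The structure mirrors the Motion example: open a source, wait on it as an event, then drain and dispatch the pending messages.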

Implementation

miniAudicle for iPad uses standard iOS libraries including UIKit, the standard user interface framework. Leveraging a preexisting user interface library has enabled rapid development and prototyping, ease of long-term software maintenance, and design consistency with iOS and other iOS apps.

The main text editing interface is a subclass of the standard UITextView that has been supplemented with several enhancements. This subclass, mATextView, has been modified to draw line numbers in a sidebar to the left of the text itself. Additionally, mATextView highlights error-causing lines in red. A given mATextView is managed by a mAEditorViewController, a common convention in UIKit and other software applications that follow the model-view-controller (MVC) pattern. The mAEditorViewController is responsible for other aspects of the editing interface, including syntax-based coloring of text and presenting inline error messages.

miniAudicle for iPad integrates directly with the core ChucK implementation in a fashion similar to the desktop edition of miniAudicle. A C++ class simply named miniAudicle encapsulates the rather intricate procedures for managing and interacting with an instance of the ChucK compiler and virtual machine. An instance of the miniAudicle class handles configuration, starting, and stopping of the VM, and can optionally feed audio input to and pull audio output from the VM. It also manages loading application-specific plugins (such as those used by the ChucK Mobile API described above), adding, replacing, and removing ChucK scripts in the VM, and retrieving VM status and error information.

Bespoke modifications to the core ChucK virtual machine were also made to support certain features that were felt to be necessary in miniAudicle for iPad. For instance, ChucK’s logging system, debug print (<<< >>> operator), and cherr and chout output objects were modified to redirect to a custom destination instead of the default C stdout/stderr. This is used to direct ChucK printing and logging to the Console Monitor. Code changes were added to allow for statically compiling “chugins” (ChucK plugins) [2] directly into the application binary rather than dynamically loading these at run time. Several minor code fixes were also needed to allow ChucK’s existing MIDI engine to be used on iOS.

Summary

miniAudicle for iPad considers how one might code in the ChucK music programming language on a medium-sized mobile touchscreen device. While the application does not abandon the textual paradigm completely, it provides some functionality to overcome the challenges created by an on-screen virtual keyboard. The application includes a separate Player mode to better take advantage of the multitouch aspect of the iPad and enable a natural live coding environment. Furthermore, the ChucK Mobile API is offered for the development of ChucK programs utilizing the location and orientation sensing functions of tablet devices.

Overall, these capabilities provide a new and interesting platform for further musical programming explorations. However, in developing and experimenting with miniAudicle for iPad, the author held a lingering feeling that a touch-based programming system centered around text is fundamentally flawed. It was necessary to adapt the prevailing text programming paradigm to this newer environment, at the very least, to serve as a baseline for further research. But to truly engage the interactions made possible by mobile touchscreen technology, a new system, holistically designed for the medium, would need to be created. One such system, Auraglyph, will be discussed in the next chapter.

References

Copyright (c) 2017, 2023 by Spencer Salazar. All rights reserved.