6. Conclusion

The best way to predict the future is to invent it.

Alan Kay

This dissertation has described the context, design, implementation, and evaluation of two systems for developing music software using mobile touch devices. The general approach to developing these systems was to consider what design features are actually appropriate for the touchscreen-based mobile technology medium. We believe this strategy applies as much to mobile touch technology as it does to whichever category of interaction is next to become widespread, be it virtual reality, augmented reality, or something yet unknown. The future of creative technology is not predestined; as its necessary inventors we are free to break down its conventions and build them anew.

The development of these systems has followed a general design framework for creating interactive mobile touch software. Some components of this framework were considered explicitly in the development of miniAudicle for iPad and Auraglyph whereas some were implicit; some have been carried over from the author’s and his colleagues’ previous work and some have only been realized in the implementation of the original research described in this dissertation. In the remaining pages, we will attempt to distill this framework into a form applicable to future work stemming from these research efforts and, we hope, applicable to the work of others.

A Framework for Mobile Interaction

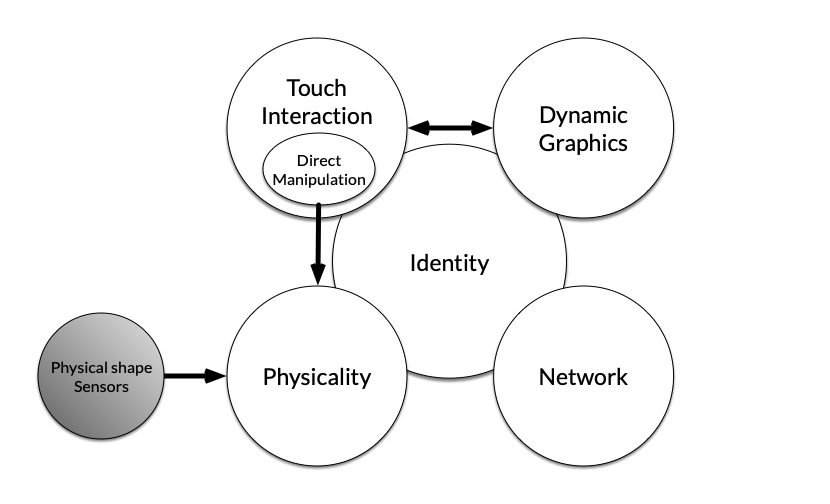

In our understanding, mobile interaction is a synthesis of touchscreen interaction and its immediate consequence, direct manipulation, together with dynamic graphics, physicality, networked communication, and identity (Figure 1.1).

Touch interaction as we use the term here is defined as a system in which a user interacts with a software application through the same screen that presents the application’s interface, using various touch-based gestures. These include simple tapping, holding a finger, and swiping a pressed finger in a direction or along some more complex path. These gestures can be further compounded by sequencing them in time (e.g., a double-tap) or by coordinating gestures from multiple fingers. Gestures are naturally continuous, but may also be discretized if appropriate to the action that results (for instance, swiping to turn a page or double-tapping to zoom in to a zoomable interface).

From a technical perspective, touch interaction introduces unique challenges. Touch positions must be managed and analyzed over time, and differences between two distinct gestures must generally be assessed according to heuristics that require tuning. For instance, analyzing a “swipe” gesture requires ascertaining how far an individual touch must travel to be considered a swipe and how much perpendicular finger movement to tolerate in the swipe. Software implementing gestures must be able to distinguish between multiple similar gestures and correctly distribute gestures that could have more than one possible target.
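The swipe heuristic described above can be sketched in a few lines. This is a minimal illustration rather than the recognizer used in either application: the function name `classify_swipe`, both threshold values, and the assumption that screen coordinates grow rightward and downward are all hypothetical.

```python
# Hypothetical tuning values (in screen points); in practice these are
# found by experimentation, as the text notes.
MIN_SWIPE_DISTANCE = 50.0       # how far a touch must travel to count as a swipe
MAX_PERPENDICULAR_DRIFT = 20.0  # how much off-axis movement is tolerated


def classify_swipe(points,
                   min_distance=MIN_SWIPE_DISTANCE,
                   max_drift=MAX_PERPENDICULAR_DRIFT):
    """Classify a list of (x, y) touch samples as a swipe direction.

    Returns 'left', 'right', 'up', 'down', or None if the touch does not
    qualify as a swipe. Assumes y increases toward the bottom of the screen.
    """
    if len(points) < 2:
        return None

    x0, y0 = points[0]
    x1, y1 = points[-1]
    dx, dy = x1 - x0, y1 - y0

    # The dominant axis of the overall movement decides the candidate direction.
    horizontal = abs(dx) >= abs(dy)
    travel = abs(dx) if horizontal else abs(dy)
    if travel < min_distance:
        return None

    # Reject the gesture if any sample strays too far on the other axis.
    if horizontal:
        drift = max(abs(y - y0) for _, y in points)
    else:
        drift = max(abs(x - x0) for x, _ in points)
    if drift > max_drift:
        return None

    if horizontal:
        return 'right' if dx > 0 else 'left'
    return 'down' if dy > 0 else 'up'
```

Even in this small form, the two thresholds interact: loosening the drift tolerance makes swipes easier to perform but harder to tell apart from freeform drags, which is the kind of tuning problem described above.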

As a whole, touch gestures readily lend themselves to basic desktop interaction metaphors, such as clicking buttons or hyperlinks. However, a powerful class of metaphor enabled by touch interaction is direct manipulation, in which an on-screen object is designed to represent some real-world object and may be manipulated and interacted with as such. Early touchscreen phone applications took such metaphors to garish extremes, including Koi Pond, which created a virtual pond within its user’s phone with fish they could poke, and Smule Sonic Lighter [2], an on-screen lighter which was flicked to light, would “burn” the edges of the screen if tilted, and could even be extinguished by blowing into the phone’s microphone.

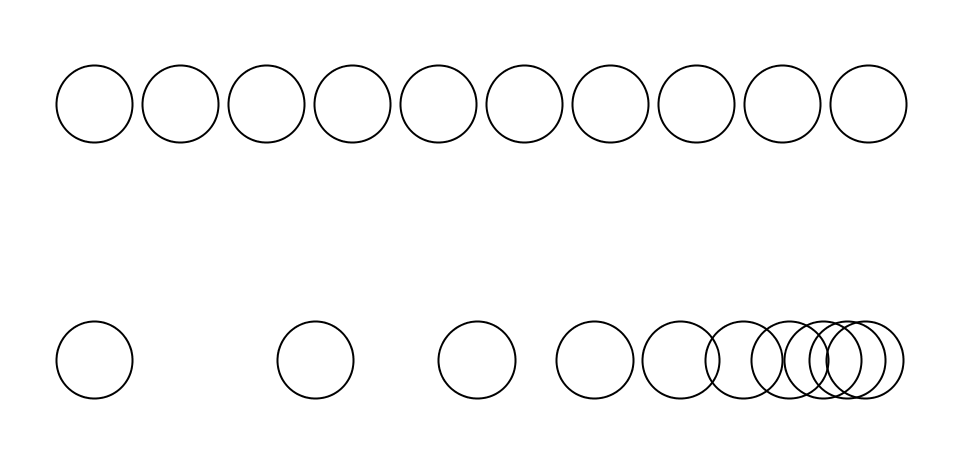

Touch interaction and direct manipulation both depend on dynamic graphics. Dynamic graphics can be thought of as a considered pairing of computer graphics per se and animation, guided by principles of physical systems. Interactive objects can have momentum, resistance, or attractions and repulsions, and they can grow and shrink or fade in and fade out, both in response to user input and of their own accord. The exact properties are not so important as the overall effect of liveness and the suggestion that one might be interacting with a real object rather than a computer representation. While this can be achieved with full physics engines and constraint solvers, often it is sufficient to animate the motion of objects with simple exponential curves, an effective simulation of a restoring force such as a spring or rubber band (Figure 1.2). On the other hand, pure linear motion is stilted and robotic, easily exposed as the movement of a machine.
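One common way to realize such an exponential curve is to move an object a fixed fraction of its remaining distance to the target on every frame. The sketch below illustrates this under stated assumptions; the names `ease_toward` and `animate` and the default `rate` value are hypothetical and are not taken from either application.

```python
import math


def ease_toward(position, target, rate, dt):
    """Advance position toward target along an exponential curve.

    rate (1/seconds) controls how quickly the gap closes; dt is the frame
    duration in seconds. Deriving the blend factor from exp() keeps the
    motion the same regardless of frame rate.
    """
    blend = 1.0 - math.exp(-rate * dt)
    return position + (target - position) * blend


def animate(start, target, rate=10.0, dt=1.0 / 60.0, frames=60):
    """Return the position at each frame of an eased animation."""
    positions = []
    position = start
    for _ in range(frames):
        position = ease_toward(position, target, rate, dt)
        positions.append(position)
    return positions
```

The object covers most of the distance in the first few frames and then settles gently, which resembles a heavily damped spring. A linear animation would instead advance by the same amount on every frame and stop abruptly.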

Direct manipulation also supports physicality, the third component of our mobile interaction framework. Physicality is most influenced, however, by the physical shape of the device itself. In fundamentally different ways from conventional computers, mobile devices can be easily picked up, gestured with, thrown, turned upside-down, stored in a pocket, and transported. They often do experience many of these interactions on a daily basis; their very utility is dependent on the ability to do so. The supplementary sensor interfaces of a device further contribute to its physicality. Most mobile devices are outfitted with an array of inertial and spatial sensors, allowing software to determine the device’s location on Earth, its orientation, and its direction. These capabilities further integrate the mobile device into its user’s environment and physical presence.

Networked communication is integral to our mobile interaction framework, though it is not directly related to the others. Mobile devices, and especially mobile phones, are communication tools, first and foremost. In addition to their primary functionality as a telephone, mobile phones have developed an assortment of additional channels for text, voice, and video communication. Cloud computing trends have begun to view mobile devices as nodes in the network lattice, constantly synchronizing their data to and from a central network store. In this sense, it is easy to think of mobile technology as being fundamentally inseparable from the network.

From this perspective, the software applications described in this dissertation are flawed by not fundamentally engaging in networked interactions. One can easily imagine being able to synchronize control and timing information and even audio data between multiple Auraglyph performers either through the local network or over the global internet. The power of the network in mobile interaction is well exemplified by Ocarina. The networking component of Ocarina in fact completes the experience by drawing its users into a shared musical world. The fluid sharing of musical performances leverages the technology’s greatest advantage over a traditional instrument, the power to instantly communicate (musically) with the entire world.

Identity in a sense unites all of the parts of this framework and is also the most ephemeral of them. Identity asks creators of creative mobile software to consider their user’s relationship to the device itself. A mobile phone is a multi-purpose device ultimately linked to communication and relationships. A designer of mobile phone software is forced to consider that, at any moment, their software might be forcibly interrupted by a FaceTime call from the user’s significant other, boss, parents, or child. A tablet is liable to be used while lying on the couch, reading in the park, or watching Netflix in bed. These functions of the technology are inseparable from the hardware itself, and the user’s relationship with the device will affect their perception of software that runs on it. Mobile software design must take these factors into consideration.

Future Work

The completion of this research has prompted two primary goals for the near future. The first of these is to further develop an extended musical work using Auraglyph, continuing the author’s compositional endeavors described in the Evaluation chapter. We firmly maintain that the most important appraisal of new music technologies is to actually make music with them. Development of the author’s performance practice, and hopefully that of others, will offer a continuing assessment of the ideas contained herein.

To this end, the second short-term goal for this research is to prepare miniAudicle for iPad and Auraglyph for release to the general public. In terms of reliability and required feature set, there is a considerable difference between software that is utilized and tested in a controlled environment and software that is distributed to many unknown users across the globe. While this gap has been closed somewhat, some essential pieces remain to be completed; these include additional node types, undo/copy/paste operations, and platform integration features like Inter-App Audio and Ableton Link, which have become expected of non-trivial mobile audio software. Support features like a website, example files, documentation, and demonstration and tutorial videos must be created to soften the learning curve of both applications and market them effectively.

A variety of additional sound design and compositional interactions would benefit Auraglyph. We would like to enhance the mathematical processing capabilities of Auraglyph with the option to write a mathematical equation and have it be applied to audio or control signals. The ability to directly write conventional Western notation, freeform graphical scores, and structures for long-term musical form would supplement Auraglyph’s compositional toolset. Adding tools to work with natural input (for instance, audio analysis and video input and analysis) would also expand the compositional possibilities afforded to users. Finishing the Composite node will enable users to build modules out of networks of nodes and facilitate code reuse. The addition of nodes for rendering custom graphics will also complement the expressive capabilities and visual distinctiveness of Auraglyph.

There are interesting questions related to networking for both Auraglyph and miniAudicle for iPad. Network synchronization of tempo was explicitly asked for by Auraglyph users; it is clear that local group performances could benefit from sharing timing information as well as general control data (for instance, pitches, keys, or frequencies) and raw audio waveforms. Distributing these collaborative audio programming interactions over a wide area network presents interesting challenges both in terms of design and implementation. Latency is always a concern when sharing real-time control or audio data over a wide area network; telematic performance practice has explored compositional approaches to managing latency, but these solutions are not always suitable for a given genre of music. Another possibility is to synchronize just the program structures over the network, with a single global program definition shared between programmers that generates parallel control and audio information on each system. Asynchronous models for collaborative programming are also worth exploring, in which a program can be built by multiple users over a longer period of time. Also needing research attention is how collaborative music programmers might find each other in a wide area networked framework: whether participants would need to coordinate with each other in advance, whether there would be some sort of matchmaking system, or whether some other method would be used to discover users with complementary musical aesthetics.
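As one illustration of what sharing timing information might involve, the sketch below estimates the offset between two devices’ clocks from a single round-trip message and uses it to place beats on a shared timeline. It is a speculative sketch, not a design adopted by either application: every function name is hypothetical, and the assumptions that network latency is symmetric and that peers agree on a timeline origin and tempo are ours.

```python
import math


def estimate_clock_offset(t_send, t_remote, t_receive):
    """Estimate (remote clock - local clock) from one round trip.

    t_send and t_receive are local timestamps; t_remote is the remote
    peer's timestamp when it replied. Assumes the outbound and return
    latencies are equal, which is only approximately true in practice.
    """
    round_trip = t_receive - t_send
    return t_remote - (t_send + round_trip / 2.0)


def beat_at(local_time, offset, origin, bpm):
    """Return the (fractional) beat position at a given local time.

    origin is the shared-timeline time of beat 0, agreed on by all peers.
    """
    shared_time = local_time + offset
    return (shared_time - origin) * bpm / 60.0


def next_beat_local_time(local_time, offset, origin, bpm):
    """Return the local clock time at which the next whole beat falls."""
    next_beat = math.floor(beat_at(local_time, offset, origin, bpm)) + 1
    shared_time = origin + next_beat * 60.0 / bpm
    return shared_time - offset
```

Because each device schedules beats against its own clock, only occasional offset measurements need to cross the network, which sidesteps latency for tempo but not for raw audio or real-time control data.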

Auraglyph’s handwriting recognition system is fairly inflexible, being based on a software library designed for machine learning research and not designed for iOS. It would be ideal to switch to a simpler handwriting recognition system, such as the $1 Stroke Recognizer [3]. A simpler system would assist the creation of new gesture sets, like musical notes, mathematical symbols, or the Roman alphabet, enabling further research into the integration of handwritten input into coding systems.

The original proposal for this dissertation included a concept for a supplementary programming language called Glyph; time constraints and design difficulties inhibited progress on this idea. This language would mix textual and visual paradigms in a system of hand-drawn text tokens interconnected either by proximity or drawn graphical structures. These ideas merit further exploration to improve Auraglyph’s handling of common programming tasks like loops and functional programming.

Further exploring the role of physical objects and writing tools in Auraglyph’s sketching paradigm suggests interesting avenues for research. Physical knobs, discrete tokens such as those used by the reacTable, or other musical controls placed on-screen present interesting possibilities to complement stylus and touch interaction. The development of other implements, such as an eraser or a paint brush, could facilitate additional classes of gestures and expand the physical/virtual metaphor underlying Auraglyph.

miniAudicle for iPad can also benefit from further research and development efforts. Supplementing the text editing features to move further away from dependence on a virtual keyboard could lead to greater support for expressive ChucK coding on the iPad. Such features might include machine learning-based text input prediction, or palettes of standard code templates like while loops, input handling, or control-rate audio modulation. Research on additional programming capabilities like user interface design and three-dimensional graphics has already begun, as has development of functionality to share ChucK code over the network with other miniAudicle users and with the general web audience [1].

We are actively interested in how the concepts and methods described herein might be applied to new interaction media, such as virtual reality or augmented reality. Programmers might use their hands to connect programming nodes in a virtual space that can be managed at a high level of abstraction and then zoomed in to examine low-level details. A virtual environment might also support networked, collaborative programming with other users around the world. An augmented reality system derived from these ideas might allow programmers to build programming structures that interact with their environment, co-located in space and interacting with elements of the real world.

Final Remarks

The desire to utilize interactions unique to mobile touchscreen technology for music programming has led to the development of two new software environments for music computing. miniAudicle for iPad brings ChucK to a tablet form factor, enabling new kinds of interactions based on the familiar textual programming paradigm. Contrasting with this initial effort, we propose a new model for music computing combining touch and handwritten input. A software application called Auraglyph has been developed to embody one conception of this model. A series of studies of the use of these systems has given these ideas validation, while also revealing additional related concerns such as reliability and forgiveness. When these software applications have reached the level of functionality and reliability expected of publicly available products, miniAudicle for iPad will be released via https://ccrma.stanford.edu/~spencer/mini-ipad and Auraglyph will be released via its website, https://auragly.ph/.

References

Copyright (c) 2017, 2023 by Spencer Salazar. All rights reserved.