1. Introduction

There are no theoretical limitations to the performance of the computer as a source of musical sounds, in contrast to the performance of ordinary instruments. At present, the range of computer music is limited principally by cost and by our knowledge of psychoacoustics. These limits are rapidly receding.

M. V. Mathews, The Digital Computer as a Musical Instrument (1963) [1]

With this resounding pronouncement, Max Mathews kickstarted the era of digitally synthesized computer music. From that point forward it has been understood that computers can produce any sound that humans are capable of hearing, given sufficient computing resources. For some time these resources have been widely available on consumer desktop and laptop computers and, in recent years, on phones and a panoply of other mobile devices. Given computing devices that can produce any sound, the question for the computer musician becomes which sounds are worth creating. From classical subtractive and additive synthesis methods to modern physical modeling techniques, the history of computer music can be viewed as a succession of constraints placed on this infinite space of possibilities. In exchange, these constraints afford computer musicians higher-level abstractions for thinking about and creating music.

To this end, much attention has been devoted to the development of programming frameworks for audio synthesis, processing, and analysis. Early music programming languages introduced the unit generator design pattern; later developments have allowed graphical programming of musical and audio processing primitives; more recent systems have offered tools for parallelization and strong timing of musical events. Each such framework establishes semantics for thinking about digital music; these semantics may limit what is easy to do in any given language, but provide the necessary support for higher-level reasoning about design and composition.

Also of critical importance to the computer musician is the role of interaction. Initially, digital music creation offered little interactivity: composers would develop an entire music software program and then wait, often overnight, for the computer to generate an audible result. This contrasts sharply with pre-electronic music production using acoustic instruments, in which sound is issued immediately, but dexterity, physical exertion, and extensive training are required for a performer to control that sound in any structured way. Over the years, available computing power has increased, costs have decreased, and physical size has diminished, allowing a diversity of approaches to interactive computer music. Some of these apply human-computer interaction research to the musical domain, employing general-purpose computing interfaces like mice, keyboards, pen tablets, and gaming controllers to design and enact musical events. Relatedly, the developing live-coding movement has positioned the act of programming itself as a musical performance (Figure 1.1). Other efforts seek to update acoustic instruments with digital technology, augmenting them with sensors and actuators mediated by a computer. Many interactive computer music systems combine both approaches. Crucially, this research into and design of interactivity has been necessary to create structures and meanings for computer music. Interactive software is our means to explore the infinite variety of sounds made available to us by the computer.

Most recently, touchscreen interfaces and mobile devices have profoundly reshaped mainstream human-computer interaction in the early 21st century. In the past decade, touchscreen devices of many kinds have become fixtures of popular consciousness: mobile phones, tablet computers, smart watches, and desktop computer screens, to name a few. For many individuals, mobile touch-based devices are increasingly the primary interface for computing, and this trend may well accelerate as computing technology becomes even more naturally integrated into daily life. New human-computer interaction paradigms have accompanied these hardware developments, addressing the complex shift from classical keyboard-and-mouse computing to multitouch interaction.

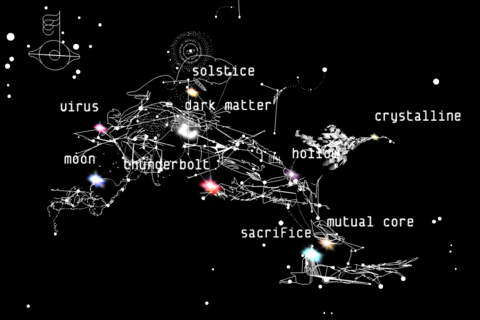

These new technologies and interaction models have seen a number of interesting uses in musical interaction, including Smule’s software applications, such as Ocarina and Magic Piano (Figure 1.2), and artist-focused apps like Björk’s Biophilia (Figure 1.4) and Radiohead’s Polyfauna (Figure 1.5). However, touchscreen-based mobile devices have seen little use for programming music software, despite recent innovations in interactive music programming on desktop, mouse-and-keyboard systems. This overlooked use of touchscreen and mobile interaction presents unique and compelling opportunities for musical programming. Touchscreen interaction offers a natural medium for expressive music programming, composition, and design by providing intrinsic gestural control and interactive feedback, two fundamental processes of musical practice. This dissertation explores these ideas through the design, implementation, and evaluation of two concepts for music programming and performance on mobile touchscreen interfaces. Through this exploration, we have developed a novel framework for sound design and music composition using exclusively touch and handwriting input.

Motivation

This work is motivated by the desire to better understand the distinguishing capabilities and limitations of touchscreen technology and, using these as guiding principles, to empower musical programming, composition, and design on such devices. Complex software developed for one interaction model, such as keyboard-and-mouse, may not successfully cross over to a different interaction model, such as a touchscreen device. As these technologies have become widespread, it is not sufficient to sit back and watch inappropriate, preexisting interaction models be forced into the mobile touchscreen metaphor. Rather, it is incumbent upon the research community to explore how best to leverage the unique strengths of this new paradigm, which is evidently here to stay, and how to design around its drawbacks. A similar trend can be seen in computer music’s shift from mainframes and dedicated synthesizers to the personal computer in the 1980s, and then to the laptop, now ubiquitous in modern computer music performance practice. As each computing paradigm gave way to the next, software tools and interaction metaphors adjusted to better take advantage of the dominant paradigm. Therefore, our overriding design philosophy in researching these systems is not to transplant a desktop software development environment to a tablet, but to consider what interactions the tablet might best provide for us.

We believe the critical properties of touchscreen interaction in musical creation are its support for greater gestural control, direct manipulation of on-screen structures, and potential for tight feedback between action and effect. Gesture and physicality are of primary importance in music creation; paradoxically, the power of computer music to obviate gesture is perhaps its most glaring flaw. Keyboard-and-mouse computing does little to remedy this condition. From a musical perspective, keys are a row of on-off switches with a limited degree of gestural interaction, and the conventional use of the mouse is a disembodied pointer drifting in virtual space. As described in Background: Mobile and Touch Computing, a rich history of laptop instrument design has sought to embrace these disadvantages as assets, with fascinating results. Yet gesture and physicality are the essence of interaction with mobile touchscreen devices. Touchscreens enable complex input with one or more fingers of one or both hands, and they allow their users to directly touch and interact with the virtual world they portray. A mobile device can be picked up, waved, dropped, flung, flipped, gestured with, attached to other objects, put in a pocket, spoken or sung into, blown into, and carried short or long distances. Moreover, these and other activities can be detected by the device’s sensors and interpreted by software. It is therefore natural to ask how these capabilities might be applied to musical creativity.

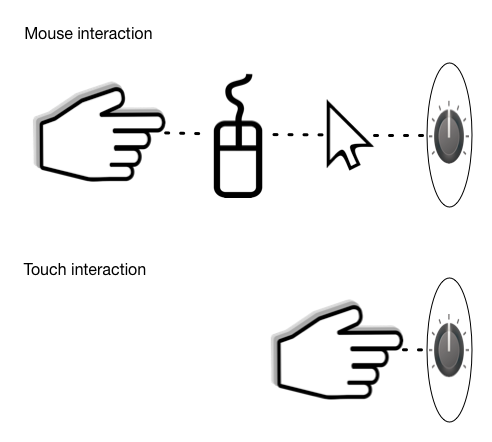

Direct manipulation allows users of creative software to interact directly with objects on-screen, narrowing the divide between physical reality and digital abstraction. While this can be used to augment simple physical metaphors, like the knobs and sliders popular in musical applications, it can also facilitate richer interactions beyond what is possible in the physical realm. This contrasts with conventional desktop computer interaction, in which the mouse cursor distances the user from the activities that occur in the underlying virtual space. Figure 1.6 visually demonstrates these comparative distances. This naturalistic form of interaction better leverages its users’ preexisting intuitions about the world. The resulting intuitiveness not only makes it easier for novices to learn a new set of interactions, but also lets experienced users perform simple actions with less thought, allowing more sophisticated interactions to be built on this intuitive foundation.

Touch and direct manipulation further enable tight feedback between action and effect. As an object is manipulated on-screen, it can quickly react visually to the changes being made to it. In a system of interacting components, the result of changing one component can be further reflected in the overall system. This dynamic, immediate feedback into the state of a virtual system allows users to quickly see the connections between inputs and outputs, facilitating understanding of the overall system. This is especially advantageous in musical systems, in which a “try-and-see” approach to design and composition is typical and a musician might explore numerous small variations on a single idea. Touch interaction is not a prerequisite for this dynamic feedback, but the shorter distance between the user and the virtual structures on screen augments its utility.

This dissertation also examines the role of stylus-based interaction on mobile touchscreen devices. A stylus allows more precise input than is typically possible with a finger, supporting tasks such as drawing symbols, waveforms, and other shapes. By placing an implement between the user and the virtual world, stylus input distances the user somewhat from the activity on screen. However, writing on a flat virtual surface is still fairly immediate, and touch input remains available for interactions where greater directness is desirable at the cost of comparatively lower precision.

Of course, mobile touch interaction is not without its weaknesses. The most obvious of these in conventional systems is the lack of tactile feedback. When someone presses a key or clicks a mouse, their finger experiences a brief physical sensation indicating that the key press or click was registered. Keyboard users with reasonable training can feel whether a finger is fully covering a single key or erroneously covering multiple keys, and fix the error before pressing down. Contemporary touchscreens are generally unable to provide such physical cues as to the success of an impending or transpiring gesture; touch systems must instead rely on visual or aural feedback. This lack of tactile feedback complicates interaction with systems that have discrete inputs. For instance, most commonly available touchscreen systems cannot simulate the clicking detents of a rotary knob or the click of a keyboard. Creative musical systems using touch interaction must acknowledge these limitations in their design.

Together, these characteristics of touchscreens are a compelling framework for working with music in diverse and artistically meaningful ways. The research herein examines how these advantages and weaknesses might be used to create and understand music computationally.

Roadmap

In this dissertation we explore new models for programming musical systems. First, we review prior research in areas related to the present work. These include systems for interactive music programming and live coding, mobile and touch computing in music, and applications of handwriting input to music and computing.

Following this, we examine the place of textual programming in touchscreen interaction, in part by developing a programming and performance environment for the ChucK language on the Apple iPad, called miniAudicle for iPad. Textual programming on touchscreen devices has been largely overlooked for music software development; it is easy to dismiss because text entry on a touchscreen’s on-screen keyboard is imprecise. Nonetheless, we believe that augmenting text programming with touch interaction is worth exploring as an initial approach to music software development.

Next, we introduce a new and drastically different programming paradigm in which touch manipulation is augmented with stylus-based handwriting input. In combining stylus and touch interaction, the system we have developed provides a number of advantages over existing touchscreen paradigms for music. Handwritten input by way of a stylus, complemented by modern digital handwriting recognition techniques, takes over the traditional role of keyboard-based text and numeric entry, using handwritten letters and numerals. In this way, handwritten gestures can both set alphanumeric parameters and write out higher-level constructs, such as programming code or musical notation. A stylus allows for modal entry of generic shapes and glyphs, e.g. canonical oscillator patterns (sine wave, sawtooth wave, square wave, etc.) or other abstract symbols. Furthermore, the stylus provides precise graphical free-form input for data such as filter transfer functions, envelopes, parameter control curves, and musical notes. In this system, multitouch finger input continues to provide functionality that has become expected of touch-based software, such as direct movement of on-screen objects, interaction with conventional controls (sliders, buttons, etc.), and other manipulations. Herein we discuss the design, development, and evaluation of Auraglyph, a system created according to these concepts.

We then present an evaluation of these two systems, miniAudicle for iPad and Auraglyph, and the conceptual foundations underlying them. This evaluation uses two approaches. The first is a set of user studies in which a number of individuals used miniAudicle for iPad or Auraglyph to create music. The second is a discussion of the author’s personal experiences with using the software described, including technical exercises and compositional explorations.

Lastly, this dissertation concludes by offering a framework for designing creative mobile software. This framework has been developed from the experiences and results described herein as well as from existing research in this area. It comprises direct manipulation, dynamic graphics, physicality, network, and identity. We intend to pursue further research of this nature with this framework in mind, and hope that other researchers and music technologists will find it useful in their endeavors.

Contributions

This dissertation offers the following contributions.

Two drastically different implementations of gestural interactive systems for music programming and composition. These are miniAudicle for iPad, a software application for programming music on tablet devices using the ChucK programming language, and Auraglyph, a software application for sound design and music composition using a new language based on handwritten gesture interaction.

A series of evaluations of these ideas and their implementations, assessing their merit for music creation and computer music education. These evaluations have shown that gesture-based music programming systems for mobile touchscreen devices, when executed effectively, provide distinct advantages over existing desktop-based systems in these areas.

A conceptual framework for gestural interactive musical software on touchscreen devices, consisting of direct manipulation, dynamic graphics, physicality, network, and identity.

References

Copyright (c) 2017, 2023 by Spencer Salazar. All rights reserved.