2. Background

Systems for Interactive Music Programming and Live Coding

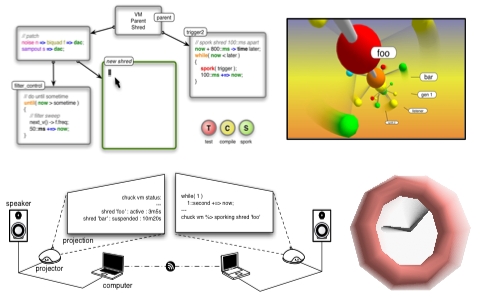

Much of this work draws on desktop computer-based environments for music programming, including miniAudicle [61] and the Audicle [89], two programming environments for the ChucK music programming language [80]. ChucK is a text-based music programming language that includes fundamental, language-level primitives for precise timing, concurrency, and synchronization of musical and sonic events. These features specifically equip the language to deal with both high-level musical considerations, such as duration, rhythm, and counterpoint, and low-level sound design concerns, like modulation and delay timings. ChucK also leverages a diverse palette of built-in sounds, including unit generators designed specifically for the language and a variety of modules from the Synthesis ToolKit [14].
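ChucK's timing model may be easiest to grasp by analogy. The following is a minimal Python sketch, not ChucK's actual virtual machine: each concurrent "shred" is a generator that yields the number of samples it wants to wait (loosely mirroring `1::second => now` in ChucK), and a scheduler jumps a shared logical clock to the next wake-up time. Timing is therefore exact in samples regardless of how long the computation itself takes. The names `Clock`, `run`, and `pulse` are hypothetical.

```python
import heapq
import itertools

class Clock:
    """Shared logical clock, analogous to ChucK's `now` (measured here in samples)."""
    def __init__(self):
        self.now = 0

def run(shreds, clock, until):
    """Deterministically interleave generator-based shreds in logical time.

    Each shred yields how many samples to wait before it resumes. Shreds
    that wake at the same time resume in the order they were scheduled.
    """
    order = itertools.count()  # tie-breaker so the heap never compares generators
    queue = [(0, next(order), shred) for shred in shreds]
    heapq.heapify(queue)
    while queue:
        wake, _, shred = heapq.heappop(queue)
        if wake > until:
            break
        clock.now = wake  # advance logical time directly to the next event
        try:
            wait = next(shred)
        except StopIteration:
            continue  # this shred has finished
        heapq.heappush(queue, (wake + wait, next(order), shred))

def pulse(name, period, clock, events):
    """A shred that logs an event every `period` samples."""
    while True:
        events.append((clock.now, name))
        yield period

clock = Clock()
events = []
run([pulse("a", 3, clock, events), pulse("b", 2, clock, events)], clock, until=6)
```

Because the clock only moves when the scheduler says so, the interleaving of the two pulses is fully reproducible, which is the property that lets a strongly timed language express rhythm and counterpoint precisely.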

The Audicle provides a number of facilities for real-time graphical introspection and debugging of ChucK code (Figure 1.1). One of the primary design goals of the Audicle was to provide sophisticated “visualizations of system state” to assist the programmer in reasoning about their programs. It achieved this by spreading multiple perspectives of running code across several virtual “faces.” These included faces visualizing the syntax tree of compiled ChucK code, the links between concurrently running programs, and the timing of each running program. The CoAudicle extended musical live coding to interactive, network-enhanced performance between multiple performers [81]. miniAudicle (Figure 1.2) slimmed down the Audicle in design and functionality to provide a general-purpose, cross-platform, and lightweight live coding environment for ChucK programming.

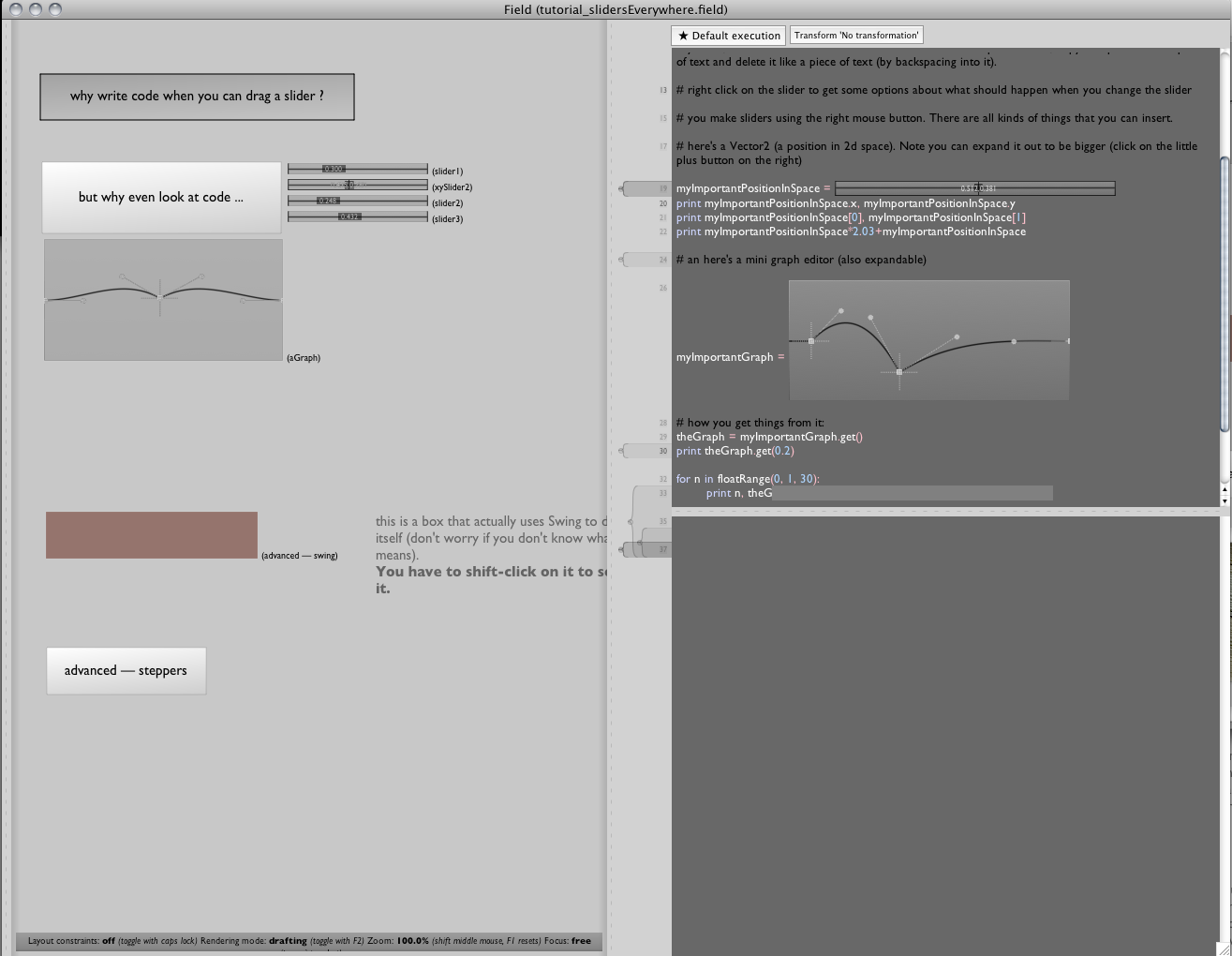

Impromptu [70] and SuperCollider [45] are both audio programming languages whose standard coding environments were built around interactive coding. Each of these systems uses an interactive code editing interface, enabling live coding of music in a performative setting [12]. More recent developments in live-coding systems have led to ixi lang, which complements SuperCollider with a domain-specific language designed for live coding [42]; Overtone, which uses the Clojure programming language to control SuperCollider’s core synthesis engine [1]; and Sonic Pi, a Ruby-descended language and environment for musical live coding [2]. The Field programming language [19], designed for creating audiovisual works, features a number of enhancements to textual programming conventions (Figure 1.3). Notably, graphical user interface elements such as sliders, buttons, and graphs can be inserted and modified directly in code. Code in Field can further be arranged in a graphical window, called the “canvas,” and be subjected to a variety of interactive graphical manipulations.

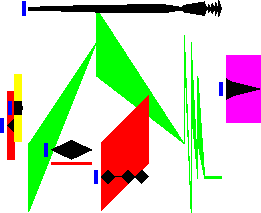

The object graph-based programming languages Pure Data (Pd) [57] and Max [95] (Figure 1.4) have tremendously influenced the space of visual computer music design and composition and represent the evolving status quo of graphical music programming. Programming in Pd or Max consists of creating objects and other nodes in a graph structure (in the computer science sense of the term) that is displayed on screen; connecting these nodes in various arrangements forms computational logic. These connections proceed at either control rate or audio rate, depending on the identity of the object and the specification of its input and output terminals. Audio-rate objects are allowed to have control-rate terminals, and audio-rate terminals may in some cases accept control-rate input. In delineating the underlying design choices made in the development of Max, Puckette candidly describes its advantages and limitations [56] and, to the extent that they are comparable, those of its counterpart Pure Data. Puckette expresses an ideal in which each constituent node of a patch displays its entire state in text format. For instance, cycle~ 100 expresses a sine wave at 100 Hz, and lores~ 400 0.9 expresses a resonant lowpass filter with a cutoff frequency of 400 Hz and a filter Q of 0.9. These parameters are explicitly displayed in the graphical canvas, rather than hidden behind separate editor windows. In a sense, one can easily scan a Max document window and quickly understand the nature of the entire program, or print the program and accurately recreate most of its parts.
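Puckette's ideal, in which a box's text fully specifies its object, can be illustrated by rebuilding a small signal chain from box text alone. This Python sketch is an assumption-laden toy, not how Max or Pd are implemented: it assumes a 44.1 kHz sample rate, supports only two hypothetical stand-ins for cycle~ and *~, and connects boxes in a simple linear chain rather than an arbitrary graph. The oscillator computes a cosine, so its first sample is at the waveform's peak.

```python
import math

SAMPLE_RATE = 44100  # assumed sample rate

class Cycle:
    """Sinusoidal oscillator configured entirely by its box text, e.g. 'cycle~ 100'."""
    def __init__(self, freq):
        self.freq = float(freq)
        self.phase = 0.0  # normalized phase in [0, 1)

    def process(self, inputs, n):
        out = []
        for _ in range(n):
            out.append(math.cos(2 * math.pi * self.phase))
            self.phase = (self.phase + self.freq / SAMPLE_RATE) % 1.0
        return out

class Times:
    """Signal multiplier configured by its box text, e.g. '*~ 0.5'."""
    def __init__(self, factor):
        self.factor = float(factor)

    def process(self, inputs, n):
        return [x * self.factor for x in inputs[0]]

# Hypothetical registry mapping box names to classes.
REGISTRY = {"cycle~": Cycle, "*~": Times}

def make_box(text):
    """Instantiate an object from its box text: a class name followed by arguments."""
    name, *args = text.split()
    return REGISTRY[name](*args)

def run_chain(box_texts, n):
    """Build boxes from text and feed each one's output into the next one's input."""
    signal = None
    for text in box_texts:
        box = make_box(text)
        signal = box.process([signal] if signal is not None else [], n)
    return signal

out = run_chain(["cycle~ 100", "*~ 0.5"], 4)
```

Since the list of box strings is the whole program, "printing" it is enough to reconstruct the chain, which is the property Puckette values.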

This clarity of design is absent in other aspects of Pd and Max, however. In both languages, the identity and order of an object’s arguments must generally be known beforehand to set them in a newly created object. The identity and function of each object’s inlets and outlets are also not displayed. Furthermore, the exact function of an inlet can vary depending on the content of its input, requiring the user to memorize this information or look it up in the documentation. In one common example, the soundfiler object in Pd accepts one of multiple “flags” as the first item in a list of inputs; the value of this flag determines how the rest of the inputs are interpreted (for instance, “read” reads a specified sound file into a specified array, and “write” writes an array out to a sound file). This is a convenient way to multiplex sophisticated functionality into a single object, but it can also obscure program logic, as the nature of any particular object input is not clear from visual inspection. More recent versions of Max have mitigated these issues by assertively displaying contextual documentation. The design of arrays and tables in Pd, or buffers in Max, also presents roadblocks to easily understanding the greater functionality of a whole program. In both languages, the array/table or buffer must be declared and named in an isolated object, adding it to a global namespace of audio buffers. It can then be read or modified by other objects by referring to it by name. Because the definition of these buffers is separated from the control flow of their use and managed in a global namespace, high-level program logic can end up spread out among disparate points in the program.
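The two patterns discussed above, named buffers in a global namespace and an input whose meaning depends on a leading flag, can be mimicked in a few lines of Python. `ARRAYS`, `declare_array`, and `soundfiler_like` are hypothetical stand-ins; the `files` dictionary replaces the real filesystem, and Pd's actual soundfiler accepts more flags and options than shown here.

```python
# Hypothetical global namespace of named arrays, like Pd arrays or Max buffers.
ARRAYS = {}

def declare_array(name, size):
    """Declare a named, zero-filled array in the global namespace."""
    ARRAYS[name] = [0.0] * size

def soundfiler_like(message, files):
    """Interpret a list message whose first item is a flag, loosely after Pd's soundfiler.

    `files` stands in for the filesystem as a dict of file name -> samples.
    Returns the number of samples transferred.
    """
    flag, *rest = message
    if flag == "read":
        filename, array_name = rest
        # Raises KeyError if the array was never declared; resizes it to fit (simplification).
        ARRAYS[array_name][:] = files[filename]
        return len(ARRAYS[array_name])
    if flag == "write":
        filename, array_name = rest
        files[filename] = list(ARRAYS[array_name])
        return len(files[filename])
    raise ValueError(f"unknown flag: {flag!r}")

files = {"loop.wav": [0.1, 0.2, 0.3]}
declare_array("table1", 3)
n_read = soundfiler_like(["read", "loop.wav", "table1"], files)
# Elsewhere in the "patch", another object reaches the same data only by its name:
peak = max(ARRAYS["table1"])
n_written = soundfiler_like(["write", "copy.wav", "table1"], files)
```

Nothing in the message `["read", "loop.wav", "table1"]` reveals where `table1` was declared or who else reads it, which is exactly the loss of locality described above.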

One of the primary motivations for developing Pure Data was to introduce a mechanism for open-ended, user-defined musical scores [58]. As implemented, this consists of a mechanism for defining hierarchies of structured data and a graphical representation of these structures (Figure 1.5). Pd enables these data structures to be manipulated programmatically or by using a graphical editor.

Several efforts have extended Pd and Max’s programming model to the synthesis of real-time graphics. The Graphics Environment for Multimedia (GEM), originally created for Max but later integrated into Pd, is an OpenGL-based graphics rendering system [15, 16]. Graphics commands are assembled using Pd objects corresponding to geometry definition, properties of geometries, and pixel-based operations, including image and video processing. Jitter1 is a Max extension with tools for handling real-time video, two- and three-dimensional rendered graphics, and video effects.

Reaktor2 is a graphical patching system for developing sound processing programs (Figure 1.6). Despite a superficial resemblance to Max and Pure Data, Reaktor does not position itself as a programming language per se. Instead, it is presented as a digital incarnation of analog modular synthesis, with virtual modules and patch cables on-screen. Reaktor’s design makes a fundamental distinction between the interface and implementation of software developed using it. This separation allows end-users of a Reaktor program to interact with the software’s high-level interface while avoiding low-level details. A similar concept is embodied in Max’s Presentation mode, which hides non-interactive objects and patch cords, leaving only the interactive parts of the program visible.

Kyma is a graphical audio programming and sound design system also based on interconnecting a modular set of sound processing nodes [63, 64]. Kyma was designed to sustain both sound design and musical composition within the same programming system, forgoing the orchestra/score model common at the time. In addition to a dataflow perspective of sound, Kyma provides additional timeline, waveform, and mixing console views of sound creation.

The SynthEdit program for the NeXT Computer allowed users to graphically build unit generator topologies by selecting individual components from a palette and connecting them using the mouse cursor [48]. Unit generators could be parameterized through an inspector window. Developed from the NeXT computer’s basic drawing program, SynthEdit also allowed users to freely draw on the panel containing the audio components [69].

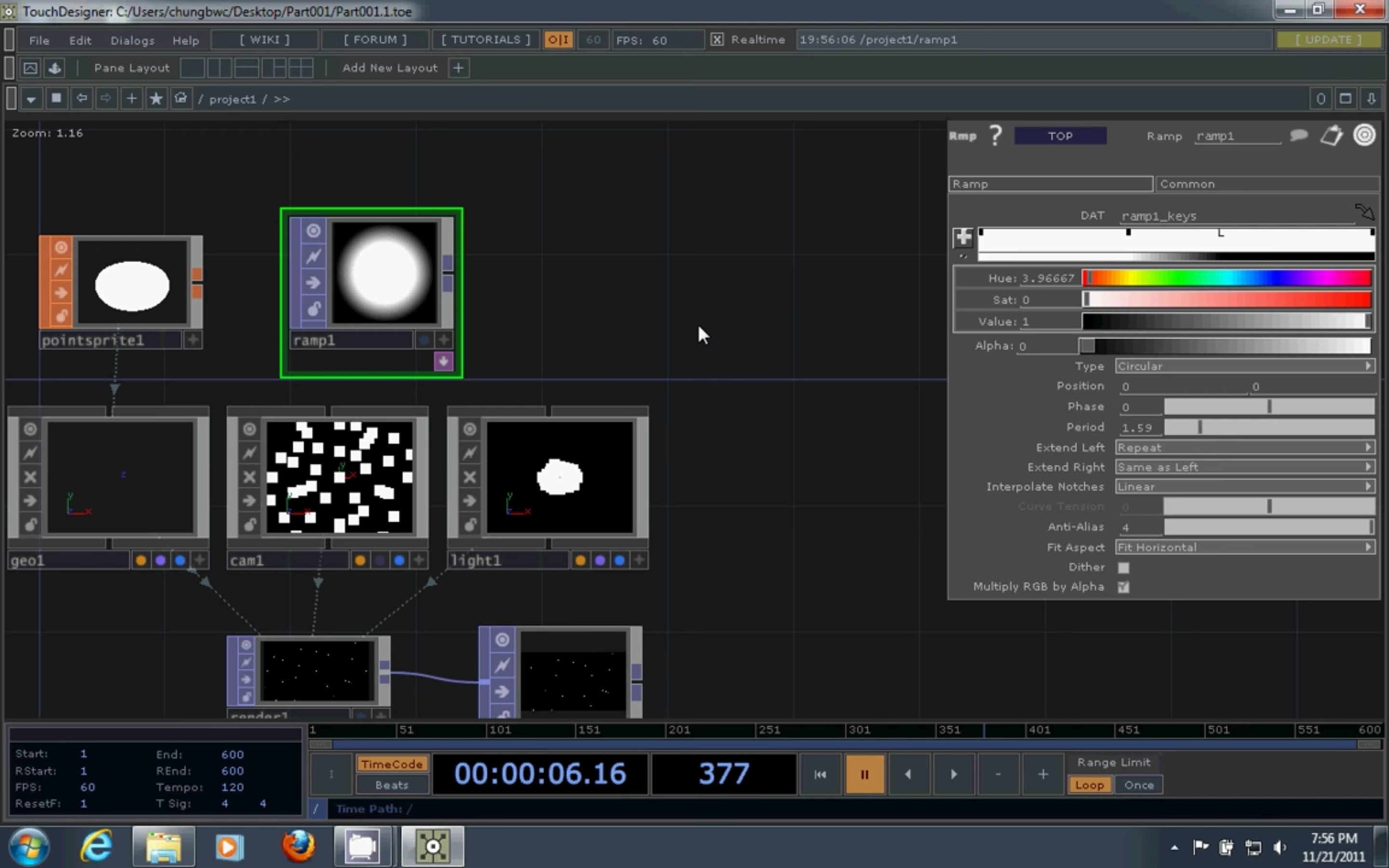

Derivative TouchDesigner3 is a graphical dataflow programming language for computer graphics and sound (Figure 1.7). A TouchDesigner program consists of a graph of objects that process sound, video, or geometry data in various ways. Its design and user feedback are somewhat antithetical to those of Pure Data and Max: the properties of each node can only be viewed or edited through a dialog that is normally hidden, but each node clearly displays the result of its processing in its graphical presentation. Rather than displaying the definition of any given node, TouchDesigner shows that node’s effect. As a result, it is straightforward to reason about how each part of a TouchDesigner program is affecting the final output.

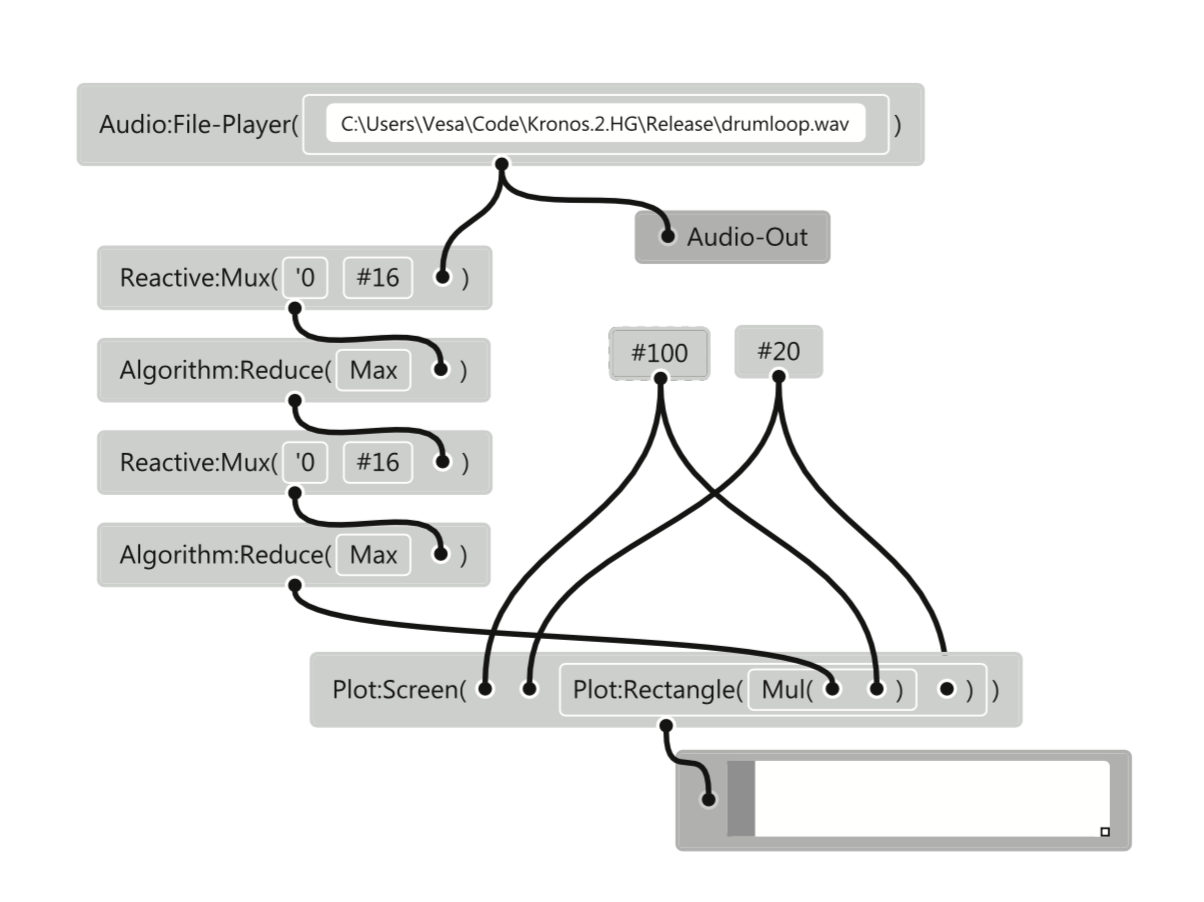

More recently, the Kronos programming language extends functional programming models to a visual space in the context of real-time music synthesis [53]. In Kronos, a programmer directly edits the syntax tree representation of a program; inputs to functions are routed from literal values, other function outputs, or function objects themselves. These functions are capable of operating either on individual data items or on full audio or graphical signals, allowing for a rich synergy between computation, synthesis, and visualization (Figure 1.8).

There exist numerous graphical programming environments specific to domains other than music and audio synthesis. National Instruments’ LabVIEW4 is a graphical programming system for scientific, engineering, and industrial applications such as data acquisition, control systems design, and automation. MathWorks’ Simulink5 allows its programmers to simulate complex interacting industrial and scientific systems by creating connections between the individual components of a block diagram. Apple’s Quartz Composer6 and vvvv7 are both dataflow-oriented graphical programming languages for creating fixed or interactive multimedia compositions; vvvv additionally includes functionality for integrating textual programming, physical computing, computer vision, and projection mapping.

Mobile and Touch Computing

Two significant contemporary trends in mainstream computing, the increasing prevalence of mobile computing devices and the proliferation of touch as a primary computer interface, have vastly expanded the possibilities available to music technology practitioners and researchers. A number of software applications from mobile application developer Smule have begun to delineate the space of possibilities for mobile music computing [85].

The iPhone application Ocarina is both a musical instrument, designed uniquely around the iPhone’s interaction capabilities, and a musical/social experience, in which performers tune in to each other’s musical renditions around the world [83, 84] (Figure 1.9). The design of Ocarina explicitly leveraged the user input and output affordances of its host device, and avoided design choices that might have been obvious, or suitable for other devices, but a poor fit for a mobile phone platform. Rather than “taking a larger instrument (e.g., a guitar or a piano) and ‘shrinking’ the experience into a small mobile device, [Wang] started with a small and simple instrument, the ocarina, [...] and fleshed it out onto the form factor of the iPhone” [83]. In fact, Ocarina used nearly every input and output on the iPhone, including the microphone, the touchscreen, the primary speaker, geolocation, WiFi/cellular networking, and the orientation sensors. To use the instrument component of Ocarina, a user would blow into the microphone on the bottom of the device. This input signal, effectively noise, was analyzed by an envelope follower and used as a continuous control of amplitude. Pitch was controlled using four on-screen “tone-holes,” or buttons; as with an acoustic ocarina, each combination of pressed buttons produced a different pitch. To enable a degree of virtuosity, tilting the device introduced vibrato. The resulting audio output was a synthesized flute-like sound, and performing the instrument resembled playing an acoustic ocarina both sonically and gesturally.
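The control mapping described above (breath noise driving amplitude through an envelope follower, tone-hole combinations selecting pitch, tilt adding vibrato) might be sketched as follows. This is an illustrative Python sketch only: the one-pole follower and its coefficients, the fingering chart, the base note, and the tilt-to-vibrato scaling are all assumptions, not values taken from Ocarina.

```python
import math

def envelope_follower(samples, attack=0.01, release=0.001):
    """One-pole envelope follower: rectify each sample, then smooth it.

    A larger coefficient while the level rises (attack) and a smaller one
    while it falls (release) make the envelope respond quickly to breath
    onsets and decay gently afterward.
    """
    env = 0.0
    out = []
    for x in samples:
        level = abs(x)
        coeff = attack if level > env else release
        env += coeff * (level - env)
        out.append(env)
    return out

# Hypothetical fingering chart: covered holes (1) -> semitones above BASE_NOTE.
BASE_NOTE = 72  # MIDI note number for C5 (assumed)
FINGERINGS = {
    (1, 1, 1, 1): 0,
    (1, 1, 1, 0): 2,
    (1, 1, 0, 0): 4,
    (1, 0, 0, 0): 5,
    (0, 0, 0, 0): 7,
}

def fingering_to_hz(holes):
    """Convert a fingering to a frequency (equal temperament, A4 = 440 Hz)."""
    note = BASE_NOTE + FINGERINGS[tuple(holes)]
    return 440.0 * 2 ** ((note - 69) / 12)

def apply_vibrato(freq, tilt, t, rate=5.0, max_depth=0.02):
    """Modulate frequency sinusoidally, with depth proportional to tilt in [0, 1]."""
    return freq * (1 + max_depth * tilt * math.sin(2 * math.pi * rate * t))
```

In a complete instrument, the follower's output would scale the amplitude of a flute-like oscillator running at `apply_vibrato(fingering_to_hz(holes), tilt, t)`.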

In addition to a self-contained instrument, Ocarina provides a concise and effective networking and social model. As users played Ocarina, their performances were recorded and streamed to Smule’s servers, along with a limited amount of metadata including location and a self-chosen username. In a separate mode of the application, users could then listen to these uploaded performances from around the world. The individual musical notes of each performance emanated from the approximate location of its performer, while a gentle glow highlighted the locations of the user base as a whole. This social experience is mediated entirely by music; in contrast to conventional social networks, there is no ability to communicate by text or other non-musical means. Critically, the world performances were effectively recordings that were replayed, rather than live streams, thus evading difficulties related to network latency in live musical interaction. In this way, Ocarina created a unique social/musical experience.

Leaf Trombone: World Stage took this model a step further by assembling groups of users into a live, American Idol-like panel of judges to critique and rate performances on a mobile phone instrument based on a trombone [87, 88]. As judges listened to a Leaf Trombone performance, they provided commentary in the form of text comments and emoji as the performer watched his or her rendition be pilloried or praised. At the performance’s conclusion, each judge would provide a final rating of the complete performance.

Magic Fiddle brought these ideas to the iPad, whose larger size accommodates a different set of interactions, such as finer-grained pitch control and touch-based amplitude control [82]. Magic Fiddle also imbued its software design with a distinctive personality, as the application “spoke” to the user as a sentient being with its own motivations and emotions. According to Wang et al., Magic Fiddle could express a variety of feelings and mental states, seeming variously “intelligent, friendly, and warm,” or “preoccupied, boastful, lonely, or pleased”; the instrument also “probably wants to be your friend.” This sense of personality, part of a larger tutorial mechanism in which the instrument effectively acted as its own teacher, served to draw the user more deeply into the musical experience than an inanimate object might.

The conceptual background of these endeavors stems from research in the Princeton Laptop Orchestra [67, 76] (Figure 1.10), the Stanford Laptop Orchestra [86], and the Small Musically Expressive Laptop Toolkit [22]. In motivating the creation of the Princeton Laptop Orchestra, the ensemble’s co-founder Dan Trueman considered a number of issues in digital instrument design, including the “sonic presence” of an instrument and the extent to which performers are engaged with their instruments (so-called “performative attention”) [77]. These notions extend from previous research in instrument design by Trueman and collaborators Curtis Bahn and Perry Cook [4, 75, 78], such as BoSSA, a violin-like instrument outfitted with a sensor-augmented bow and a spherical speaker array. The central pillar of these efforts is a focus on instrument creation as an intricate weaving of digital technology, sound design, gestural interaction design, and physical craft. Cook explicitly argues for the importance of mutual interdependence between a physical musical interface and the underlying digital synthesis it controls [13], in contrast to the anything-goes bricolage made possible by an increasing variety of interchangeable MIDI controllers and software synthesizers.

Each of the efforts described above examines the explicit affordances of a technological device as a musical instrument in its own right, rather than as a generic unit of audio computing. They further consider the dynamics of its essential parts and integrate these into the aesthetics of the instrument. This approach ensures a maximally efficient interface between gesture and sound, allowing for both initial accessibility and expressive depth. These principles have deeply influenced the design of the new, touch-based programming tools discussed herein.

The Mobile Phone Orchestra (MoPhO) has applied these techniques in developing a set of compositions and principles for mobile phone-based performance practice [54] (Figure 1.11). One MoPhO composition leveraged the phone’s spatial sensors to detect the direction in which it was being pointed, and as a performer blew into the phone’s microphone, synthetic wind chimes would play from loudspeakers in that direction. Another piece treated a set of phones as ping-pong paddles, using inertial sensing to detect its performers’ swinging motions and bounce a virtual ball around the performance space while sonifying its movement and activity. MoPhO further calls for additional practices to leverage the distinct capabilities of mobile technology, such as “social and geographical elements of performance” and audience participation.

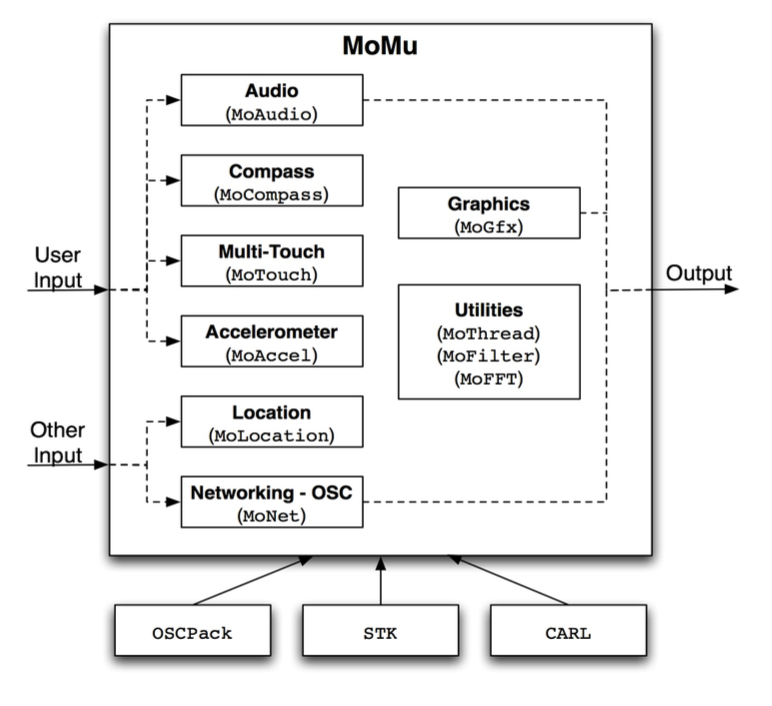

Many of MoPhO’s compositions were built using the MoMu toolkit [7] (Figure 1.12), a programming framework for real-time interactive mobile audio applications. MoMu unifies a variety of interactive technologies prevalent in mobile art and music applications into a single common interface. Among these are 3-D graphics, touch interaction, inertial/spatial sensing, networking, fast Fourier transform, and of course real-time audio. In this sense, it explicitly delineates which technologies its authors have found valuable in realizing interactive mobile music software.

A number of efforts have signaled a desire to streamline the design of musical interactions based on touchscreen and mobile devices. Many of the tools in this space have leveraged the gestural and interactive aspects of mobile and touchscreen technology while leaving to conventional computers the parts that work comparatively well on that platform. TouchOSC8 is a flexible control application that sends Open Sound Control [94] or MIDI messages to an arbitrary client in response to user interaction with a variety of on-screen sliders, rotary knobs, buttons, device orientation, and other controls. TouchOSC comes with a selection of default interface layouts in addition to providing tools to customize new arrangements of controls. In this sense, TouchOSC delegates to other software the task of mapping the controls to sound (or computer-generated visuals, or any other desired output). This facilitates rapid development of a mobile audio system built using any tool capable of working with OSC or MIDI. However, this typically also binds the system to a desktop or laptop computer and a wireless network for exchanging data with this computer; while it is possible for the OSC/MIDI client to run locally on the mobile device in tandem with TouchOSC, in the author’s experience this is less common. This impairs scenarios where the mobile device might be freely moved to different locations and, as noted by Tarakajian [73], forces the performer to consider low-level networking details like IP addresses and ports. Generally, these details are not what musicians want to concern themselves with in the midst of setting up a performance, and in the author’s experience wireless networking is a common point of failure in computer music performance. TouchOSC is also limited to interactions that are compatible with its fixed set of control objects; interactions that depend on providing rich feedback to the user about the system being controlled are not possible with TouchOSC.
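Because TouchOSC communicates via Open Sound Control, it may help to see what a single control message looks like on the wire. The Python sketch below encodes an OSC 1.0 message with int32 and float32 arguments: strings are null-terminated and zero-padded to four-byte boundaries, and numbers are big-endian. The address `/1/fader1`, the host, and the port are illustrative assumptions; the explicit host and port arguments of `send_osc` are precisely the networking details a performer must configure by hand.

```python
import socket
import struct

def osc_pad(data: bytes) -> bytes:
    """Null-terminate and zero-pad to a multiple of 4 bytes (OSC 1.0 string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message whose arguments are Python ints (int32) or floats (float32)."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_pad(address.encode("ascii")) + osc_pad(tags.encode("ascii")) + payload

def send_osc(message: bytes, host: str, port: int) -> None:
    """Send one OSC message as a UDP datagram to a manually configured host and port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))

# A fader moved to its midpoint (hypothetical address):
msg = osc_message("/1/fader1", 0.5)
# send_osc(msg, "192.168.1.10", 8000)  # hypothetical receiver address and port
```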

Roberts’ Control [59, 60] automatically generates interface items like sliders and buttons on a touchscreen, based on specifications provided by connected desktop software such as Max, SuperCollider, or any other software compatible with OSC. Control allows a desktop-based computer program to completely describe its interface to a mobile phone client, which then presents the interface as specified. Aside from laying out stock control widgets, Control permits interaction between the widgets as well as the creation of new widgets by adding JavaScript code. Control obviates manual network configuration by automatically discovering devices running Control on the same network.
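The central idea behind Control, that the desktop program sends a description of its interface and the touchscreen client lays it out and reports touches back as addressed values, can be sketched in Python. The JSON schema here (`type`, normalized `bounds`, `address`) and the function names are hypothetical simplifications, not Control's actual interface format.

```python
import json
from dataclasses import dataclass

# Hypothetical interface description sent by the desktop program.
SPEC = """
[
  {"name": "cutoff",  "type": "slider", "bounds": [0.0, 0.0, 0.2, 1.0], "address": "/synth/cutoff"},
  {"name": "trigger", "type": "button", "bounds": [0.2, 0.0, 0.2, 0.2], "address": "/synth/trigger"}
]
"""

@dataclass
class Widget:
    name: str
    kind: str
    rect: tuple  # (x, y, width, height) in pixels
    address: str

def build_interface(spec_json, width, height):
    """Lay out widgets by scaling normalized bounds to the screen size."""
    widgets = []
    for item in json.loads(spec_json):
        x, y, w, h = item["bounds"]
        rect = (x * width, y * height, w * width, h * height)
        widgets.append(Widget(item["name"], item["type"], rect, item["address"]))
    return widgets

def handle_touch(widgets, tx, ty):
    """Return (address, value) for the widget under a touch, or None if none is hit."""
    for widget in widgets:
        x, y, w, h = widget.rect
        if x <= tx < x + w and y <= ty < y + h:
            if widget.kind == "slider":
                # 1.0 at the top edge, falling toward 0.0 at the bottom edge.
                return widget.address, 1.0 - (ty - y) / h
            return widget.address, 1.0  # buttons simply report a press
    return None

widgets = build_interface(SPEC, width=1000, height=500)
```

Because the layout is data rather than code on the phone, the same client can present any interface the desktop program chooses to describe.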

Mira, an iPad application, dynamically replicates the interface of desktop-based Max/MSP programs, joining conventional music software development with touch interaction [73]. Pure Data [57] has seen use across a range of portable devices, from early mass-market portable devices like the iPAQ personal digital assistant and the iPod [28, 29] to more recent devices running the iOS and Android mobile operating systems [6]. These efforts have typically used Pd as an audio backend to a purpose-built performance interface, without the ability to program directly on the device itself. MobMuPlat is a software application that generalizes this concept, allowing user-generated Pd patches to be loaded alongside a definition for a custom user interface [33]. The Pd patch can be edited as usual in Pd’s standard desktop editor, and the custom interface can be edited with a desktop application accompanying the mobile application.

TouchOSC, Control, Mira, MobMuPlat, and similar tools use existing programming frameworks for desktop computers to build musical systems for mobile and touchscreen-based devices that do not natively support these frameworks. This is an effective strategy for creating mobile music experiences in a way that leverages an artist or engineer’s existing skill set with a desktop-based programming system. On the other hand, these systems avoid the challenging question of how one might develop sophisticated musical software on the device itself, without being attached to a desktop computer. Perhaps this is to their benefit; it is not immediately evident that an existing programming system like Max or Reaktor would translate well to a mobile touch environment.

The JazzMutant Lemur,9 in the words of Roberts [59], effectively “invented the market” of “multitouch control surfaces geared towards use in the arts.” The Lemur, now discontinued, was a dedicated hardware device that provided a variety of on-screen controls that emitted MIDI or OSC over an Ethernet connection when activated. The interface could be arranged using a companion editor that ran on a desktop computer; available controls included the conventional knobs, buttons, and sliders, as well as a two-dimensional “Ring Area” and a physics-based “Multiball” control [66]. The Lemur was notable for its wide use in popular music by such diverse artists as Daft Punk, Damian Taylor (performing with Björk), Richie Hawtin, Einstürzende Neubauten, Orbital, and Jonathan Harvey.10 The Lemur concept has since been resurrected as a software application for mobile phone and tablet11 that connects its diverse set of controls to other creative software via OSC and MIDI.

The reacTable [35] introduced a large-format, multi-user table that accepted touch input as well as interaction with physical objects placed on its surface (Figure 1.13, left). The reacTable operated by using a camera to track perturbations of projected infrared light on the table’s surface, corresponding to human touch input. This camera could also detect physical tokens placed on the surface, which were tagged with fiducial markers on the side facing the surface (Figure 1.13, right). A reacTable user can create musical output by placing objects corresponding to various audio generators, effects, and controls on the table. The reacTable software automatically links compatible objects, such as a loop player and a delay effect, when they are moved or placed in proximity to each other. Up to two parameters can be controlled directly for each object by turning the physical token in either direction or by swiping a circular slider surrounding the token. Additional parameters are exposed through a popup dialog that can be activated by an on-screen button; for instance, filters can be swept in frequency and adjusted in Q by dragging a point around in two-dimensional space. This arrangement accommodates a variety of physically befitting gestures; lifting a token from the surface removes it entirely from the sound processing chain, and swiping the connection between two objects turns that connection’s volume up or down. The reacTable easily handles multiple performers, and in fact its size and the quantity of individual controls seem to encourage such uses. Furthermore, the reacTable’s distinctive visual design and use of light and translucent objects on its surface create an alluring visual aesthetic. At the same time, the dependence on freely moving tangible objects sacrifices some of the advantages of a pure-software approach, such as easily saving and recalling patches or encapsulating functionality into modules. The reacTable website documents a save and load feature,12 but it is not clear if or how this interacts with physical objects placed on the surface. Jordà indicates that modular subpatch creation was achieved in a prototype of the reacTable, but this feature does not seem to have made it into a current version.
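The reacTable's proximity-based patching can be approximated with a simple rule: each token connects to the nearest compatible token within a radius, provided the destination lies closer to the output than the source. That "downstream only" condition is an assumption of this Python sketch (it guarantees the connections never form a cycle); the token kinds, compatibility table, and radius are likewise hypothetical rather than the reacTable's documented behavior.

```python
import math
from dataclasses import dataclass

@dataclass
class Token:
    name: str
    kind: str  # "generator", "effect", or "output"
    x: float
    y: float

# Hypothetical compatibility table: which kinds of token may feed which.
ACCEPTS = {
    "generator": {"effect", "output"},
    "effect": {"effect", "output"},
    "output": set(),
}

def auto_link(tokens, radius):
    """Link each token to the nearest compatible, downstream token within `radius`.

    Returns a dict mapping source name -> destination name.
    """
    out = next(t for t in tokens if t.kind == "output")

    def to_out(t):
        return math.hypot(t.x - out.x, t.y - out.y)

    links = {}
    for src in tokens:
        best, best_dist = None, radius
        for dst in tokens:
            if dst is src or dst.kind not in ACCEPTS[src.kind]:
                continue
            if to_out(dst) >= to_out(src):
                continue  # only link toward the output, so no cycles can form
            d = math.hypot(dst.x - src.x, dst.y - src.y)
            if d <= best_dist:
                best, best_dist = dst, d
        if best is not None:
            links[src.name] = best.name
    return links

tokens = [
    Token("output", "output", 0.0, 0.0),
    Token("loop", "generator", 3.0, 0.0),
    Token("delay", "effect", 1.5, 0.0),
]
links = auto_link(tokens, radius=3.5)
```

Recomputing the links after every token movement gives the "move it closer and it connects" behavior: sliding the delay away from the table's center makes the loop player fall back to the output directly.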

Jordà has stated that an explicit design goal of reacTable was to “provide all the necessary information about the instrument and the player state” [35]. In addition to displaying the two directly controllable parameters for each object, the reacTable provides a first-order visualization of each object’s effect on the audio signal path by displaying its output waveform in real-time. Objects that generate notes or other control information display pulses corresponding to magnitude and quantity.

The reacTable concept and software were later released as an application for mobile phone and tablet13 called “Reactable Mobile” (Figure 1.14). Reactable Mobile replaced physical tokens with on-screen objects that could be dragged around the screen and connected, mirroring much of the functionality of the original reacTable. ROTOR14 developed these ideas further on commercially available tablet computers, in particular offering a set of tangible controllers that could be placed on-screen to interact with objects within the software.

Davidson and Han explored a variety of gestural approaches to musical interaction on large-scale, pressure-sensitive multitouch surfaces [17]. These included pressure-sensitive interface controls as well as more sophisticated manual input for deforming objects or measuring strain as input to physical modeling synthesis.

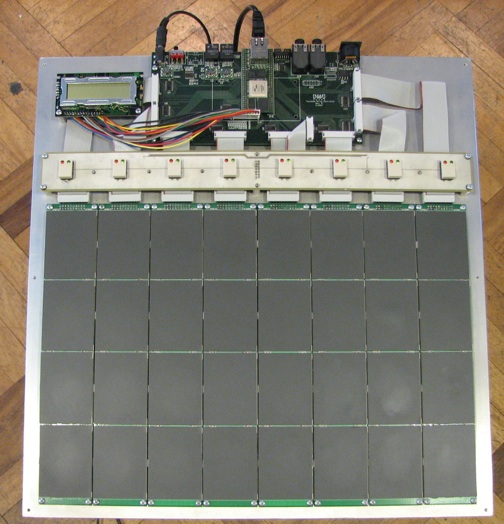

There is a rich history of electronic musical control interfaces that are touch-sensitive but do not have a screen. The Buchla Thunder MIDI controller (Figure 1.15, left) included a number of pressure- and location-sensing touch-plates in a strikingly unconventional arrangement [10]. The Thunder extended the touch-plate interface common in Buchla’s modular synthesis instruments [5] to the field of standalone digital musical interfaces. David Wessel’s SLABS (Figure 1.15, right), developed with Rimas Avizienis, extended the touch-sensitive pad musical interface further, providing 24 pads that sensed two-dimensional position as well as vertical force; each pad was sampled at high frequency and applied to sound synthesis at audio rate [91].

Early developments in touch input in the context of computer music are associated with the research of William Buxton, who with Sasaki et al. developed a capacitive touch-sensing tablet and used it as a simple frequency-modulation synthesizer, as a percussion trigger, and as a generalized control surface for synthesis parameters [62]. Interestingly, these researchers seem to regard their FM synthesizer merely as a test program, overlooking the possibility that it could be a new kind of musical instrument. In early research on acoustic touch- and force-sensitive displays, Herot and Weinzapfel hint at the desire to engage directly with virtual objects not only at a visual level but to “convey more natural perceptions” of these objects, specifically weight [31].

UrMus is a framework for prototyping and developing musical interactions on mobile phones [21]. UrMus includes a touch-based editing interface for creating mappings from the phone’s sensor inputs to audio and visual outputs. In this sense, it is one of the few systems at this time that attempt to engage in mobile music programming entirely on the mobile device itself. UrMus also embeds a Lua-based scripting environment for developing more intricate mobile music software interactions.

In the domain of general purpose computing, TouchDevelop is a touch-based text programming environment designed for use on mobile phones, in which programming constructs are selected from a context-aware list, reducing the dependence on keyboard-based text input [74]. Codea is a Lua-based software development system for iPad in which text editing is supplemented with touch gestures for parameter editing and mapping.15

Handwriting Input for Computing and Music

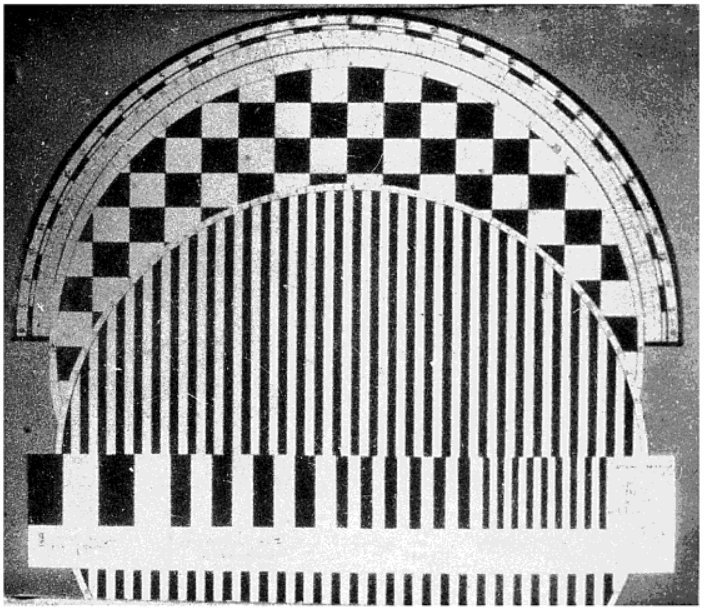

Beginning in the early 20th century, the desire to construct sound by hand through technological means can be seen in the work and experimentation of a variety of artists, musicians, and technologists. Early experiments in manually inscribing sound forms into gramophone records were evidently conducted by the artist László Moholy-Nagy and composers Paul Hindemith and George Antheil, independently of one another, but the results of these experiments were never released or formalized into musical works [40]. Anticipating these explorations, Moholy-Nagy proposed the creation of a so-called “groove-script” for emplacing synthetic sounds into the grooves of a record, the possibilities of which would represent “a fundamental innovation in sound production (of new, hitherto unknown sounds and tonal relations)” [50, 52].

Later, following the inception of optical encoding for cinematic film sound, Moholy-Nagy called for “a true opto-acoustic synthesis in the sound film” and for the use of “sound units [...] traced directly on the sound track” [40, 51]. Oskar Fischinger and Rudolf Pfenninger, having extensively studied the visual forms of optically encoded sounds, both released works incorporating meticulously drawn graphics that were photographed and then printed to the sound track of motion picture film; the latter artist referred to his practice as tönende Handschrift (Sounding Handwriting) [40].

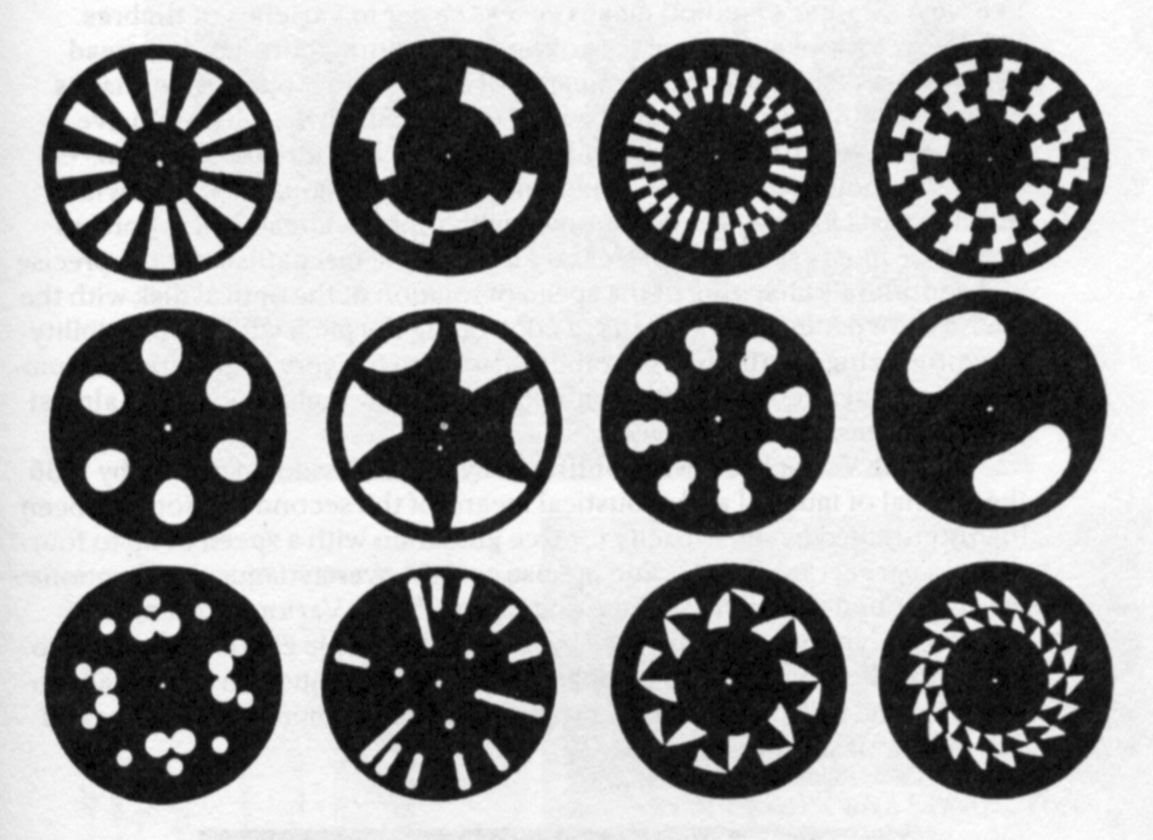

Almost simultaneously with Fischinger and Pfenninger’s efforts, research in converting hand-made forms to sound recorded on optical film was being conducted by a number of Russian musicians and inventors, including Arseny Avraamov, Evgeny Sholpo, Georgy Rimsky-Korsakov, and Boris Yankovsky [34]. These efforts led to Sholpo’s development of the Variophone, which modulated waveforms drawn on rotating discs to enable control of pitch, timbre, and volume (Figure 1.16, left) [68]. Yankovsky’s Vibroexponator utilized a rostrum camera and other optomechanical mechanisms to compose tones of varying pitch from a library of hand-drawn spectra and amplitude envelopes (Figure 1.16, right) [68].

Animator Norman McLaren composed soundtracks for his video works by directly drawing on the sound track portion of the film surface [36, 46] or by photographing a set of cards with sounds hand-drawn on them [47]; the latter technique was employed in the soundtrack for his Academy Award-winning short film Neighbours (1952) [36].

The ANS Synthesizer was an optoelectrical additive synthesizer that built sounds from pure tones covering a range of frequencies, each machine-inscribed on a rotating glass disc [38]. A musician would compose with these tones by drawing freehand on a mastic-covered plate aligned with the disc, with the horizontal dimension representing time and the vertical dimension representing frequency (Figure 1.17). Each stroke scraped away part of the mastic coating, and a photoelectric system would then synthesize the pure tones whose frequencies corresponded to the positions of these gaps.
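As an illustration of the ANS’s time-frequency layout, the following Python sketch renders an idealized, binary version of the plate by additive synthesis. It assumes that each row stands for one inscribed pure tone, each column for a slice of time, and a `True` cell for a gap scraped in the mastic. The function `ans_render` and its parameters are hypothetical; the actual instrument was optoelectrical and continuous rather than a discrete grid.

```python
import math


def ans_render(grid, freqs, slice_dur, sample_rate=8000):
    """Render a binary time-frequency grid by additive synthesis.

    Hypothetical model of the ANS plate: grid[r][c] is True where the
    mastic has been scraped away, meaning row r's pure tone (freqs[r])
    sounds during time slice c. Returns a list of float samples.
    """
    n_rows = len(grid)
    n_slices = len(grid[0])
    samples_per_slice = round(slice_dur * sample_rate)
    out = []
    for c in range(n_slices):
        # Tones whose "gap" is open in this time slice.
        active = [freqs[r] for r in range(n_rows) if grid[r][c]]
        for i in range(samples_per_slice):
            # A single global time keeps each sine's phase continuous
            # when a gap spans several consecutive slices.
            t = (c * samples_per_slice + i) / sample_rate
            s = sum(math.sin(2 * math.pi * f * t) for f in active)
            # Divide by the number of rows so output stays within [-1, 1].
            out.append(s / n_rows)
    return out
```

For example, `ans_render([[True, False, True], [False, False, True]], [440.0, 660.0], 0.01)` yields 240 samples: a single 440 Hz tone, a silent slice, and then both tones together.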

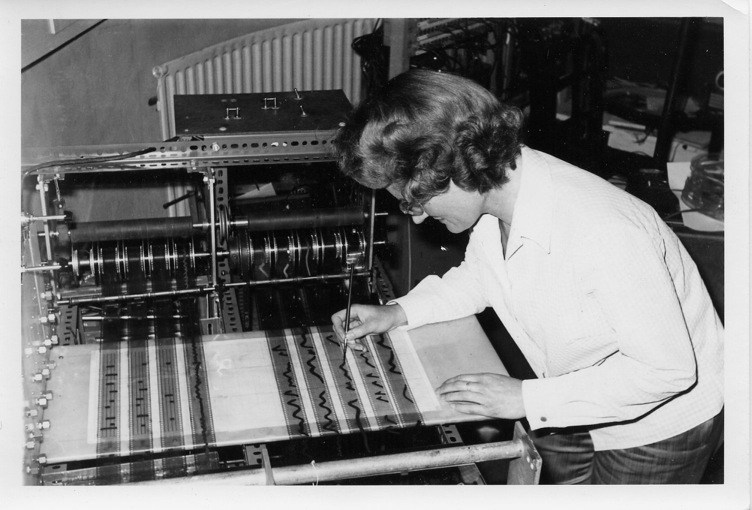

The Oramics machine, created by composer and sound engineer Daphne Oram, comprised ten loops of optical film on which the machine’s operator drew (Figure 1.18) [32]. Each film strip controlled a separate synthesis parameter, including waveform shape, duration, vibrato, timbre, intensity, amplitude, and frequency. Oram suggested that, using the Oramics system, a composer could learn “an alphabet of symbols with which he will be able to indicate all the parameters needed to build up the sound he requires.” In light of the system’s method of use and its characteristic musical results, Hutton has suggested that, rather than being driven purely by music composition, “Oram was more motivated to explore the technological process relating to the sound” [32].

Early research in direct, graphical manipulation of digital data via pen or stylus, augmented by computer processing, can be seen in Sutherland’s Sketchpad [72]. In Sketchpad, a light pen is used to control the generation of geometric structures on a computer screen. Pen input defines the visual appearance of these structures, such as the number of sides of a polygon or the center point of a circle. The user’s imprecise pen strokes are converted into straight lines and continuous arcs; rather than encoding a direct representation of the user’s gestures, Sketchpad translates user input into an assemblage of predefined geometric symbols (Figure 1.19). These symbols are parameterized in shape, position, size, and other properties to match user input in a structured fashion. Pen input is also used to describe geometric constraints that the software applies to these structures. When further input modifies a parameter of a structure, dependent constraints can be updated automatically. For example, if a given structure is moved in the viewing plane, other objects anchored to it will move in tandem. In this way, pen input encodes both the visual appearance of the system and an underlying set of semantics, forming a framework for a kind of visual programming.
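The anchoring behavior described above can be approximated, in much simplified form, by propagating translations through a graph of dependent shapes. The Python sketch below assumes a hypothetical `Shape` class in which anchoring only preserves relative offsets. Sketchpad itself satisfied far more general constraints, so this illustrates the idea of dependent updates, not Sketchpad’s algorithm.

```python
class Shape:
    """Hypothetical shape whose anchored dependents follow its moves."""

    def __init__(self, name, x, y):
        self.name = name
        self.x = x
        self.y = y
        self.anchored = []  # shapes that keep a fixed offset from this one

    def anchor(self, other):
        """Constrain `other` to move in tandem with this shape."""
        self.anchored.append(other)

    def move_by(self, dx, dy, _seen=None):
        """Translate this shape and, recursively, everything anchored to it.

        The `_seen` set stops infinite recursion if anchors form a cycle.
        """
        seen = set() if _seen is None else _seen
        if id(self) in seen:
            return
        seen.add(id(self))
        self.x += dx
        self.y += dy
        for child in self.anchored:
            child.move_by(dx, dy, seen)
```

After `base.anchor(arm)`, calling `base.move_by(3, -1)` shifts both shapes by the same amount, so the arm stays attached to the base.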

Similar historical research in pen input for programming is evident in GRAIL and the RAND Tablet [18]. The RAND Tablet consisted of a CRT screen, a flat tablet surface mounted in front of it, and a pen whose position on the tablet surface could be detected. The RAND Tablet found a variety of uses, including recognition of Roman and Chinese characters [90] and map annotation. The GRAIL language was a programming system for the RAND Tablet that enabled the user to create flowchart processes through the “automatic recognition of appropriate symbols, [allowing] the man to print or draw appropriate symbols freehand” [20]. It is not clear what specific applications these flowchart operations were intended for, but a later system called BIOMOD applied these concepts to biological modeling [30].

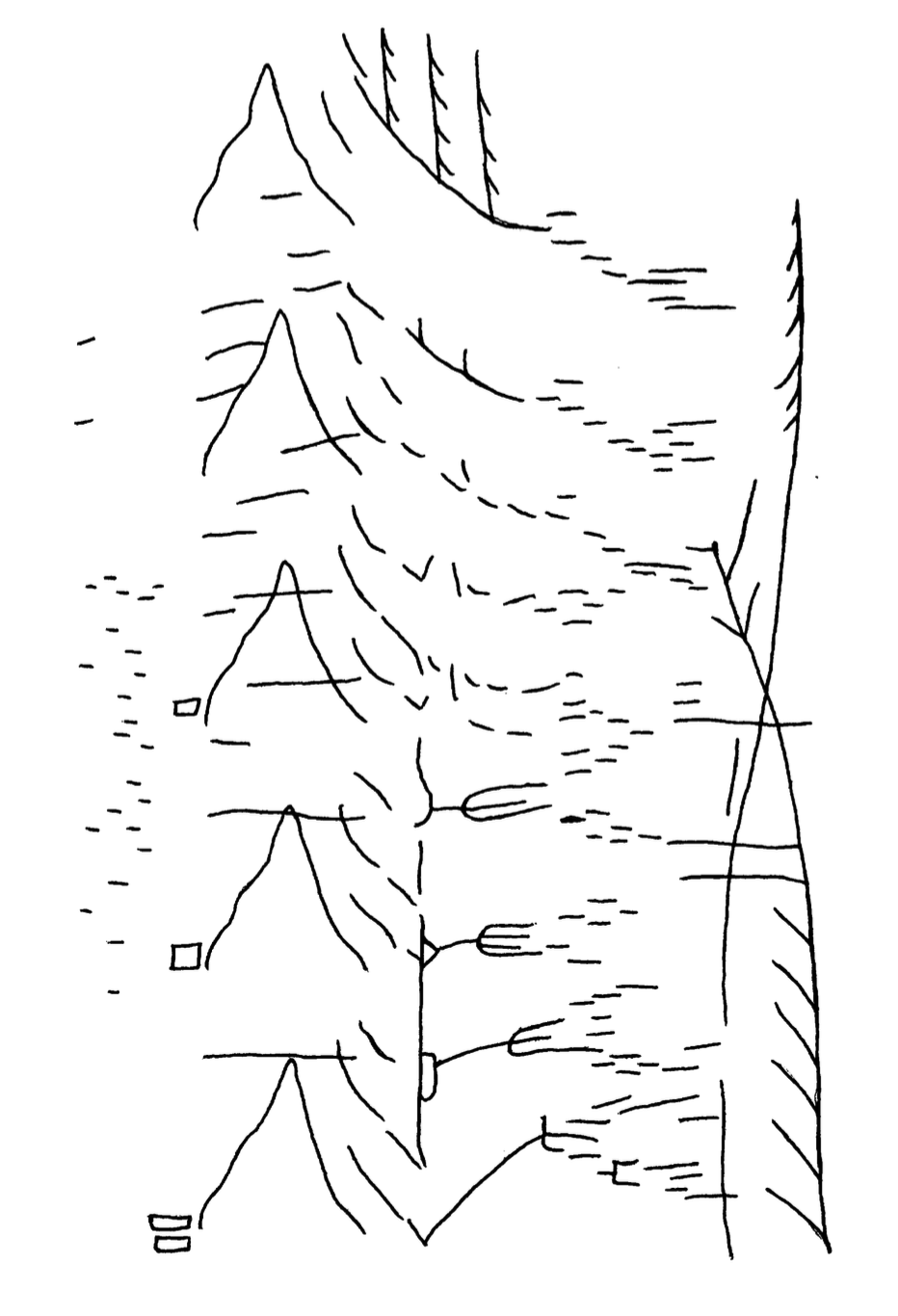

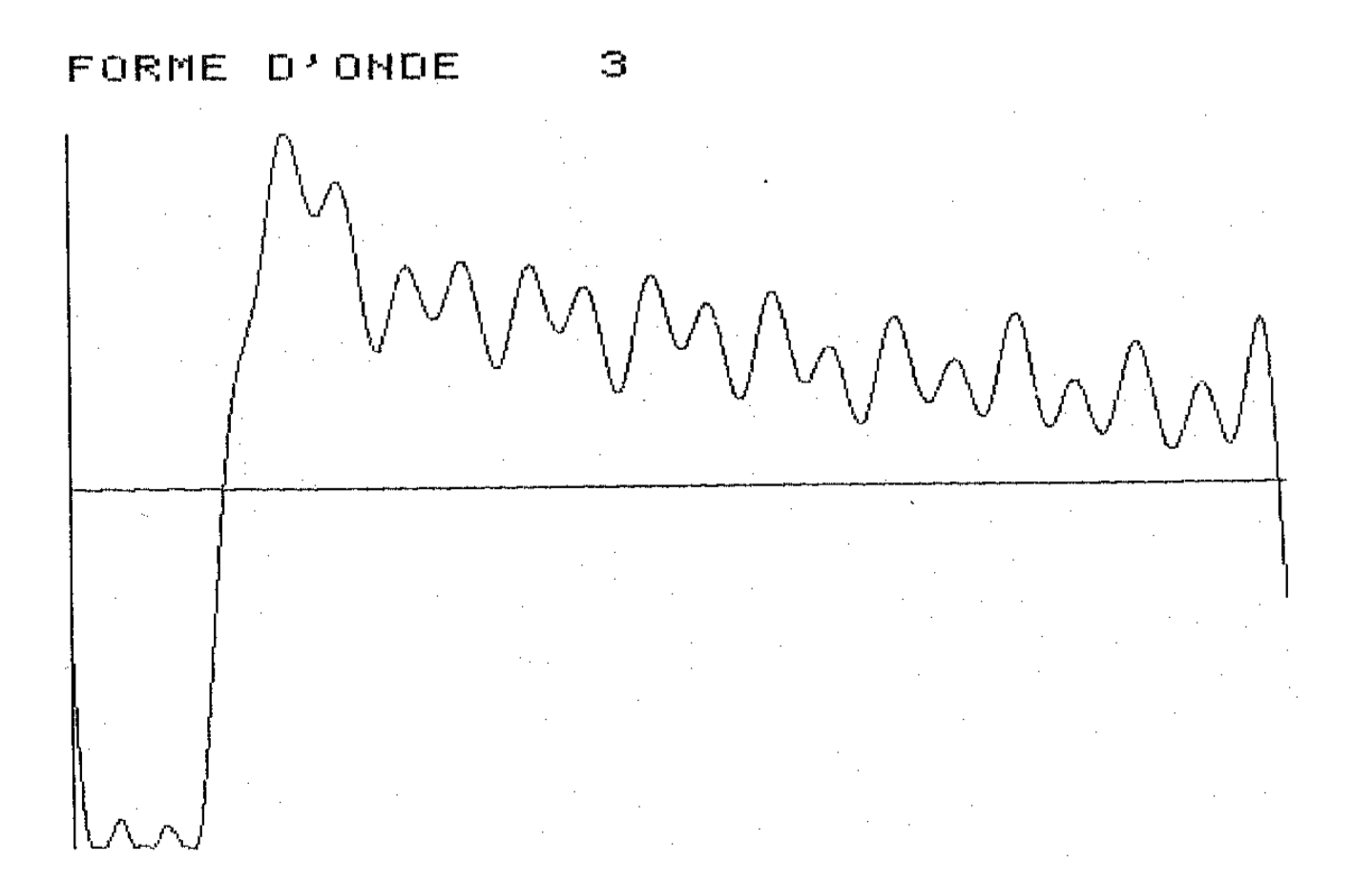

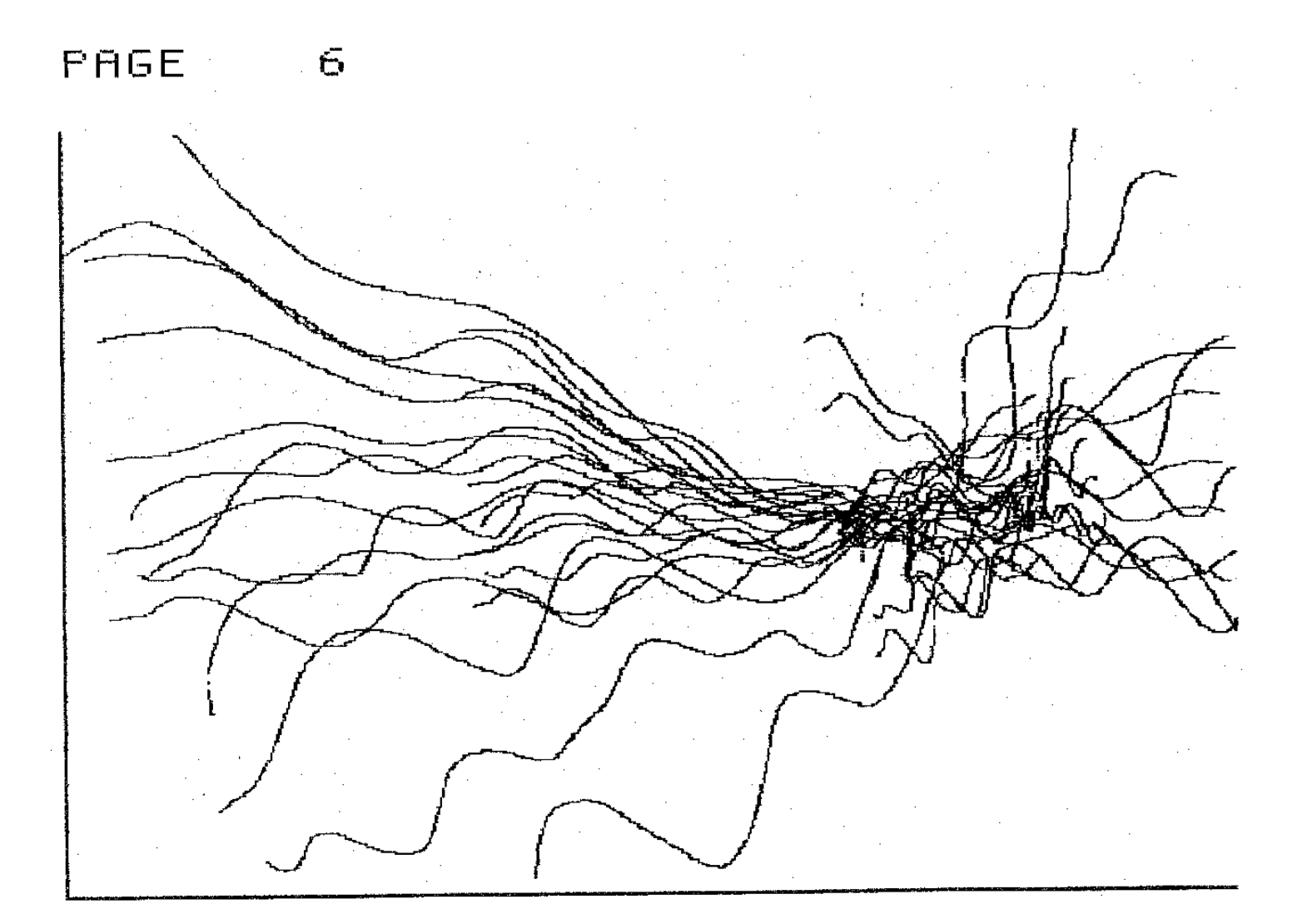

The UPIC, designed by Iannis Xenakis, was a digital music system comprising a computer, a digitizing tablet, and two screens, and was intended to support compositional activity at both the micro and macro levels [41]. Using the tablet, a composer could freely draw cyclical waveforms and amplitude envelopes, which were visualized on screen and made available for synthesis. By drawing further strokes in a similar fashion, the composer could then specify how these waveforms were played, with vertical position corresponding to frequency and horizontal position corresponding to time (Figure 1.20). Separate displays were used to render the drawn graphics and alphanumeric text, and a printer was also available for printing the graphics. Xenakis used the UPIC to develop his work Mycenae-Alpha [71]. The ideas underlying the UPIC were later extended in IanniX, a software application for conventional desktop computers that features graphic score notation and playback [11].
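The UPIC’s two-stage model, in which a drawn waveform is played along a drawn pitch-time stroke, can be sketched as a wavetable oscillator. In this Python sketch, the logarithmic pitch mapping, the 55 Hz to 1760 Hz range, the linear interpolation between stroke points, and all function names are illustrative assumptions rather than details of Xenakis’s system.

```python
def y_to_freq(y, f_lo=55.0, f_hi=1760.0):
    """Map a normalized vertical position y in [0, 1] to frequency (log scale)."""
    return f_lo * (f_hi / f_lo) ** y


def wavetable_sample(table, phase):
    """Read a one-cycle drawn waveform at phase in [0, 1), interpolating linearly."""
    pos = phase * len(table)
    i = int(pos)
    frac = pos - i
    a = table[i % len(table)]
    b = table[(i + 1) % len(table)]
    return a + (b - a) * frac


def render_stroke(table, stroke, sample_rate=8000):
    """Play a drawn waveform along a stroke of (time_sec, y) points.

    Horizontal position is time and vertical position is pitch; y is
    linearly interpolated between successive points of the stroke.
    """
    out = []
    phase = 0.0
    for (t0, y0), (t1, y1) in zip(stroke, stroke[1:]):
        n = round((t1 - t0) * sample_rate)
        for k in range(n):
            y = y0 + (y1 - y0) * k / n
            out.append(wavetable_sample(table, phase))
            # Advance the phase by one sample at the current frequency.
            phase = (phase + y_to_freq(y) / sample_rate) % 1.0
    return out
```

For instance, `render_stroke([0.0, 1.0, 0.0, -1.0], [(0.0, 0.0), (0.5, 1.0)])` produces a half-second glissando that rises five octaves using a crude triangle-like drawn waveform. Accumulating phase, rather than recomputing it from time, keeps the waveform continuous while the frequency changes.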

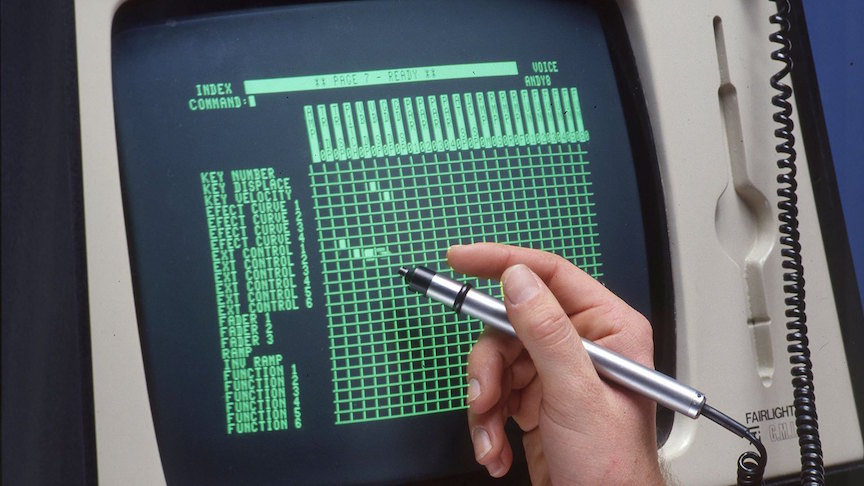

The Fairlight CMI digital music workstation came equipped with a light pen that could be used to draw on-screen waveforms for synthesis, adjust the partial amplitudes of an additive synthesizer, or modify other control parameters of the synthesis system [43] (Figure 1.21). Later versions of the CMI relocated pen input to a screenless tablet next to the computer’s keyboard, reportedly to address the arm fatigue users experienced from holding the pen up to the screen. New England Digital’s Synclavier was a similar dedicated computer music workstation in use at this time, integrating hardware, software, a musical keyboard, and a control panel to enable real-time music composition, performance, and education [3].

A number of research efforts have explored machine recognition of handwritten Western music notation. Fujinaga explored image-based recognition of music notation using a nearest-neighbor classifier and a genetic algorithm [24–26]. Buxton et al. developed a number of score editing tools for the Structured Sound Synthesis Project, including a basic two-dimensional pen gesture recognizer for input of note pitch and duration [8]. The Music Notepad by Forsberg et al. developed this approach further, allowing pen input of a rich variety of note types and rests, as well as pen gesture-based editing operations like deletion, copying, and pasting [23]. Miyao and Maruyama combined optical and pen gesture approaches to improve the overall accuracy of notation recognition [49].

Landay’s research in interface design led to the development of SILK, an interactive design tool in which interface elements sketched by a user are recognized and dynamically made interactive [39]. Individual interface “screens” constructed in this way can then be linked by drawing arrows from an interactive widget to the screen it activates. By combining the conceptualization, prototyping, and validation of a user interface into a single process, this approach greatly reduces the inefficiency of treating them as separate steps. PaperComposer is a software tool that lets musicians design custom paper-based interfaces [27]. As one sketches over the printed interface with a digital pen, these interactions are digitized and linked back to the interface’s digital representation.

Commercially available graphics input tablets, such as those manufactured by Wacom, have found broad use in computer music composition and performance. Graphics tablets provide a wealth of continuous sensor data applicable to musical control, including the pen’s three-dimensional position relative to the tablet surface, its two-dimensional tilt angle, its rotation, and its pressure [55]. Mark Trayle developed a variety of techniques for using stylus input in performance [9]. In some cases, the pen’s x and y positions, its pressure, and its angle of tilt would each be mapped to synthesis parameters, creating a direct interface to sound design and an instrument aiming for “maximum expression.” Trayle would often directly trace graphic scores produced by his frequent collaborator Wadada Leo Smith. In other modes of tablet use, Trayle divided the tablet surface into multiple zones, with some zones mapped to direct control of a synthesis algorithm and others controlling ongoing synthesis processes.
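The zoned mapping strategy described above can be sketched as a function that routes each pen sample to a different set of parameters depending on where it lands. In this Python sketch, the two-zone split, the parameter names (`pitch_hz`, `brightness`, `tempo_scale`, `density`), and their ranges are hypothetical illustrations, not Trayle’s actual mappings.

```python
from dataclasses import dataclass


@dataclass
class PenSample:
    x: float         # normalized horizontal position, 0..1
    y: float         # normalized vertical position, 0..1
    pressure: float  # normalized pen pressure, 0..1
    tilt: float      # normalized tilt magnitude, 0..1


def map_pen(sample, split=0.5):
    """Route one pen sample to synthesis parameters by tablet zone.

    Left of `split`: direct control, where position, pressure, and tilt
    each drive a parameter of a single voice. Right of `split`: the pen
    steers an ongoing, hypothetical generative process instead.
    """
    if sample.x < split:
        return {
            "zone": "direct",
            "pitch_hz": 100.0 + sample.y * 900.0,  # 100 Hz to 1000 Hz
            "amplitude": sample.pressure,
            "brightness": sample.tilt,
        }
    return {
        "zone": "process",
        "tempo_scale": 0.5 + sample.y * 1.5,  # half speed to double speed
        "density": sample.pressure,
    }
```

Calling `map_pen(PenSample(0.2, 0.5, 0.8, 0.1))` returns direct-control parameters with a pitch of 550 Hz and an amplitude of 0.8, whereas the same gesture on the right half of the tablet would adjust the tempo and density of the background process.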

Wright et al. developed a variety of tablet-based musical instruments, including a virtual tambura, a multi-purpose string instrument, and a tool for navigating timbres in an additive synthesizer [93]. Wessel and Wright discussed additional possibilities using graphics tablets, such as two-handed interaction involving a pen and puck mouse used on the tablet surface, direct mapping of tablet input to synthesis parameters, dividing the tablet surface into multiple regions with individual sonic mappings, treating the tablet surface as a musical timeline, and the entry of control signals or direct audio data that are buffered and reused by the underlying music system [92]. The use of graphics tablets by other digital musicians has extended to drawing of a dynamic, network-synchronized graphic score [37], control of overtone density in a beating Risset drone [67], bowing of a virtual string instrument [65], and parameterizing the playback of a stored audio sample through both conventional and granular means [79].

Summary

We have presented a variety of previous research and thought related to interactive music programming, music computing for mobile touchscreen technologies, and handwriting-based musical expressivity. Several common threads run through these collective efforts. One is a tension between high-level and low-level control: while many systems have sought to use gestural input to control higher levels of musical abstraction, others have held on to direct mappings of synthesis parameters. Another is secondary feedback about the system’s state; many of the systems discussed in this chapter provide additional visual information representing the internal processes responsible for the resulting sound. At the core of much of this work there seems to be a desire to find new sources of creativity latent in emerging technology.

References

Copyright (c) 2017, 2023 by Spencer Salazar. All rights reserved.